Revolutionary Inventions That Disappeared For Dumb Reasons

From time to time, everyone has a revolutionary idea, one that could change the world. But then something interrupts our thought process: it's sweeps week, the kids are late for school, the boss is harping at us to get off the stupid phone. And thanks to one stupid thing, it totally face-plants. These could-have-been world-changing inventions disappeared for really, really dumb reasons.

The Apple Newton falls on its head

In 1993, nearly a decade before flat-screen tablets ran amok in the mass market, the innovators at Apple gave the world the Newton MessagePad. Packing a whopping 4 megabytes of memory, the Newton ran on its own operating system. It also used an early touchscreen with a stylus to translate handwritten notes directly into useful data, scheduling appointments and reminding you you're better off with an actual notebook.

And that was the problem. For a system marketed for its e-notepad applications, the handwriting recognition software was horrific. Garry Trudeau's "Doonesbury" famously took a week-long potshot at the PDA, in which the titular character tries time and again to get the handwriting software to work, querying the device with "Catching on?" which it converts to: "Egg Freckles." The satirical misnomer caught fire in the mass media, and the once-renowned company became a laughingstock. Later models improved upon the scribble decoder, but the damage was done.

The EV1 jumps the e-vehicle gun

General Motors unveiled the first workable electric car, the EV1, in 1996. A stylish, zero-emission coupe, the EV1 ran for roughly 100 miles between charges, which wasn't bad given the capabilities of the era. True, there were a few complaints about its awkward steering (due to extra heft in the rear) and its short battery life. Other than that, the car had a lot of potential. So why was the car ditched in 2003?

GM cited a lack of trained mechanics who could repair the batteries, the car's limited drive time (although that had increased), and its poor fit as a family car, given that it was a two-seater. Worst, though, was production cost. It also didn't help that hybrid electric-gas vehicles were coming into the market.

Of the roughly 1,100 built, only about 40 EV1s survived. (GM destroyed many of them.) Sadly, it seems the EV1 was a little too far ahead of its time to succeed.

Intellivision gets played ... by itself

Everyone had that one friend who always got the latest toys, designer clothes, and state-of-the-art video game systems. For those of us growing up in the early '80s, this meant playing the short-lived but surprisingly advanced Mattel Intellivision.

"Intelligent television" pulled out all the stops for its 1979 release, with the toymaker top-loading the game station with a whopping 1456 bytes of RAM, a 2 megahertz processor, and 16-bit graphics way before Nintendo came on the scene. Users could even play "downloaded" games via the PlayCable service (sort of an early Xbox Live) with a 12-button numeric keypad controller or an optional keyboard.

Intellivision had several flaws, most notably its non-ergonomic controllers with their unintuitive button set. The Intellivision III was a perfect example of too-little-too-late and failed to wow '80s gamers. It also didn't help that personal computers dropped drastically in price. And as the video game market plummeted, it pulled Intellivision down with it.

LaserDisc barely scratches the video tape market

The LaserDisc is the ultimate example of a would-be revolutionary format. It was initially developed by MCA and Philips, and the first movie released in the US on LD was Jaws in 1978. The 12-inch silver platters offered better resolution than standard VHS tapes and were initially much cheaper to manufacture. The medium also allowed for a crisp fast-forward, fast-reverse, fairly seamless pauses, and watchable slow-mo.

LaserDisc did have some serious drawbacks. For one thing, it cost a crapload just to buy the player, although prices eventually fell. Later variations even rivaled modern DVDs in storage size during the mid-'80s, but most movies forced viewers to flip the disc at least once during playback, if not swap out discs. Early LDs also suffered from "laser rot," a condition that chewed up the reflective aluminum layer, causing skips and lags.

The main problem with LaserDiscs was that US distributors never really embraced them, limiting the available titles. Cinephiles and import collectors (as the discs were better supported abroad) were about the only holdouts for LaserDiscs, and it wasn't enough.

Imaginary pirates killed the DAT

First developed in the early '70s, digital audio tapes (or DATs) were a compact, high-fidelity alternative to the muddiness and single-headed, tape-chewing goodness of analog cassettes. As CDs were poised to take over for vinyl, DATs sat on the bench waiting for a shot at their analog predecessors. Offering longer recording times, digital quality, and the ability to erase and rewrite—something CDs weren't capable of at the time—DATs offered a smaller package without the chipping, scratching, and shattering problems of similar formats of the era.

But there was one major hitch: The Recording Industry Association of America, worried that bootleggers would have a field day with digital tapes, fought against them. Eventually, the RIAA backed down, once the government introduced regulations (designed to combat piracy) that limited DAT's capabilities. As a result, the tapes never made much of an impact, aside from in heavily saturated markets like Japan.

Sony finally dropped the format in 2005, although DATs are still floating around in concert bootlegger and anachronistic collector circles.

Cinerama displays its flaws across a wide screen

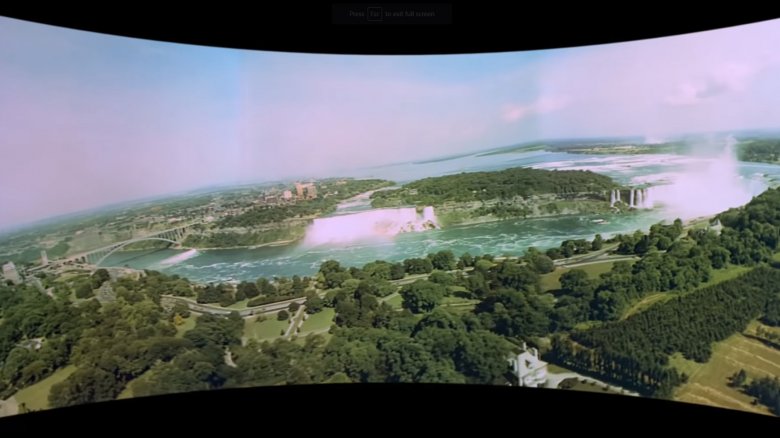

As television began to time-suck the world in the 1950s, the slightly flummoxed film industry tried to coax audiences back into the theaters with often silly gimmicks like Smell-o-Vision, 3-D, Emergo, and Sensurround. One of these tricks, though, wasn't as much a cheap ploy as a misfire at widescreen. Cinerama was developed in the early '50s. The first film released was the self-reflexive This Is Cinerama from 1952, which impressed audiences enough for a return ticket. Cinerama made use of a bowled movie screen and three projectors to create an immersive viewing experience, much like IMAX does today. There were, however, a few drawbacks.

The projectionist was forced to work three reels at once, and if one strip of film broke, the screen went temporarily black. Plus, images looked warped to anyone not sitting around the "sweet spot" in the cinema. Cinerama filmmakers also dealt with their fair share of hassles since the rounded screen made it nearly impossible to shoot close-ups — actors talking to one another appeared to be looking past each other.

Later iterations used 70-millimeter anamorphic cameras to better effect, but Cinerama was already fading into obscurity. Today, aside from a handful of theaters around the world, the format has largely died off. Its influence did pave the way for IMAX and other widescreen processes, though.

Pay By Touch strikes a nerve

Biometrics, or a system of identification based on your biology, was once considered the way of the future for easy, secure access to just about anything. What's that about ethical issues based on coding and classifying an entire populace based on biological traits? Shhh.

After collecting several hundred million dollars from backers, the Pay By Touch Company developed point-of-sale (POS) systems in the late '90s. Biometric credit cards offered several advantages over existing credit card systems. Not only would it be extremely difficult for others to access your bank account, but forgetting or losing your credit card would be a thing of the past. By the mid-2000s, Pay By Touch's POS system was poised to change the way we shop. But trouble was brewing for the company.

CEO John Rogers was hit with several lawsuits alleging domestic abuse, sexual harassment, workplace bullying, drug binges, and mismanagement of funds. The claims, the public relations nightmare, and an internal power struggle forced the company to fold in 2008. Since then, other companies have noodled around with biometrics, but the technology has yet to regain its prominence. Somewhere, George Orwell is letting out a sigh of relief.

AT&T's Sceptre fails royally

WebTV was a stripped-down way to browse the web and check emails from the comfort of your own television. AT&T's Sceptre was an early version of it, and this "web" connected through a cable box or telephone lines. Of course, the web wasn't really the web as we know it, but "videotex," an early interactive system with only one centralized source of information. Sceptre initially came as a kit: a central processing unit that connected to the television, an infrared keyboard, and a 1200-baud modem. Once connected to the videotex server, the interactive box delivered news, e-commerce, airline schedules, program downloads, and even a chat feature, all for the low, low price of just $39.95 a month.

Naturally, there was a catch: The system required browsers to drop $600 for the Sceptre, which is a lot for a Luddite looking to break into the proto-online world. And the TV-based unit only functioned with a subscription, so you'd be shelling out a lot just to get started plus a $40 charge each month and connection fees for a handful of services. All that without any of the additional perks of early personal computers.

Eventually, the telecom giant realized the issue and expanded the service to people rocking PCs with relatively inexpensive software, but it was too late for Sceptre. AT&T took a $100 million bath.

The flight of the Concorde underwhelms

The Concorde was born during an era of flight-based optimism, when rockets were headed to the Moon and everyone felt groovy about anything airborne. A joint venture between Britain and France, the Mach 2 commercial airliner first took to the skies in 1969 and got its first paying passengers in 1976. Over nearly three decades of service, Concorde was one of only two airliners to carry passengers at supersonic speeds.

The final flight of the Concorde took place in 2003. The biggest reason for its grounding was that catching a lift on the sound barrier-breaking jet ran about $7,000, with round trips a bargain rate at $10,000. Reaching those near-1,400 mph cruising speeds also caused wall-rattling sonic booms, which were pretty unpleasant for anyone not in the plane. The Concorde was also highly inefficient, sucking over 100 tons of fuel for just one flight between London and New York, double the amount the massive 777 used. The final nails in the Concorde's coffin were a 2000 crash that killed everyone aboard and a broad slowdown in commercial flying after 9/11.

There's a light at the end of Concorde's tunnel, though. Multiple groups, including Emirates airline, are working on getting the Concorde back in the air.

Going off the rails on a maglev train

Admittedly, it's difficult to imagine a single-railed train without humming the "Monorail" song from The Simpsons. Perhaps the long-running cartoon show and its fast-talking Phil Hartman-portrayed huckster wrecked maglev trains (trains propelled by magnetic repulsion along an elevated, single-railed track) for Americans. Perhaps not. The prospect of high-speed trains in the States' car culture seems about as likely as personal helicopters.

Unlike the US, some parts of the world have embraced the maglev. Cleaner, quieter, and more efficient, it truly is a superior form of railroading. Rapid rail systems in Europe and Asia routinely reach speeds between 200 and 300 mph, with a Japanese bullet train recently topping out at nearly 400 mph.

High-speed rail is a political sore spot in the States, though, with car culture, as well as geographical distance, standing in the way of a larger-scale investment in maglev trains. America also has a longtime love-hate relationship with trains, but if the US ever does embrace high-speed rail, it could gain more traction in developing nations.

Sadly, maglev lags far behind its own potential across the globe. However, repulsive transit might still have a lot of life left in it, even in the United States, where several urban areas are investigating the possibilities.