Lies They Taught You In School

We like to believe our teachers are infallible. After all, they prime our minds during our formative years, imbuing us with a knowledge base we're meant to build on for the rest of our lives. It's those years that create our core foundation for learning and our trust in educational authority figures. But contrary to what their students may believe, teachers are far from omniscient beings.

Some of what we're taught during our K-12 education is useful, and while most of us won't use our knowledge of World War II or our ability to identify mitochondria as the powerhouse of the cell in any professional capacity, at least those facts are true. Educators, unfortunately, still manage to perpetuate a surprising number of urban myths and a good deal of misinformation. It's not their fault, though. Some of these falsehoods have been widely believed for years, decades, or even centuries, much longer than they should've been. We're here to set the record straight. Here are the lies they taught you in school.

Lie: You only use 10% of your brain

We've all heard it a thousand times: "You only use 10% of your brain." The phrase leads one to believe there's a trove of hidden brainpower that could turn us into superhumans if we only buckled down and tapped into it. This, however, is a myth. If you could only use 10% of your thought box, you probably wouldn't be capable of reading this article or tying your shoes. Lucky for you, our species is capable of harnessing a lot more of the brain's power than we were told.

According to neurologist Dr. John Henley of the Mayo Clinic (via Scientific American), people use close to 100% of their brains throughout the day. At the lowest, we might be using 10% of our brains at any given time, but even that's unlikely since the organ controls such a wide range of functions — conscious and unconscious. It has designated areas for everything from language to math to basic life support functions such as breathing. Only using the mythic 10% would make it quite difficult to breathe, walk, and talk on the phone without dying.

So while the 10% brainpower excuse might help us feel better about forgetting why we walked into the kitchen three times in a row, we have a much larger percentage of our brains to blame for our boneheaded behavior.

Lie: The first Thanksgiving marked a long, peaceful friendship with Indigenous Americans

This feel-good story was practically shoved down U.S. students' throats during primary school. The pilgrims landed at Plymouth Rock, the land was inhospitable to the newcomers, and many of them starved. Luckily for the colonists, the Indigenous people felt bad for them and taught them how to grow crops on unfamiliar soil so they could share a happy celebration with a very unlucky turkey. While much of this story is technically true (minus the turkey), it's often used as a sort of trick to make children believe those early colonists shared a peaceful relationship with America's Indigenous populations, and that's anything but the truth.

There isn't much historical data about that first Thanksgiving in 1621, but as you'd gather from sources such as History, the events of the festival are generally accepted as truth by most Americans, and they even led to a short-term treaty with one Indigenous tribe (and only one). But many Indigenous Americans view the first Thanksgiving quite differently. According to WBUR, it's common for people of Native heritage to hold a morning fast over Thanksgiving because to them, the day marks a tainted history filled with genocide and other hardships they never would've faced if Europeans hadn't taken over their lands. The first Thanksgiving may have been peaceful, but it was peaceful like a motionless snake waiting to strike.

Lie: Slavery ended with the Emancipation Proclamation

Slavery is one of the worst atrocities the United States has ever seen. It's mortifying to know that Americans behaved that way toward other humans, but at least it's over ... ish. The accurate history of abolition isn't widely known. For example, and contrary to popular belief, slavery didn't end in the United States with President Lincoln's Emancipation Proclamation in 1863. According to PBS, the proclamation did free slaves ... in Confederate-controlled states. Slaveholding states that had stayed loyal to the Union were still allowed to keep slaves, mostly because Lincoln didn't want to offend them and drive them into the Confederacy's camp. Pretty messed up, huh?

Don't worry: Slavery was mostly ended with the passing of the 13th Amendment in 1865, as noted by The Atlantic, which outlawed both slavery and involuntary servitude in all forms, minus a giant, gaping loophole that's still taken advantage of today. According to the language used in the amendment, involuntary servitude is still allowed as long as it's punishment for a crime, and we've seen this tradition live on through prison labor. Many historians blame the loophole for the booming prison industry in the United States, one that disproportionately incarcerates Black Americans. Chain gangs might be a thing of the past, but prisoners forced to work under threat of punishment are still very much a reality in this country, despite the work of modern abolitionists.

Lie: It's unacceptable to start a sentence with a conjunction

Experts will likely agree that the English language is an illogical mess of several ill-fitting European languages smashed together by a hyperactive four-year-old, and the way the "rules" are taught to us through school doesn't make it any easier — mostly because these "rules" aren't actual rules at all. Take, for example, this piece of grammar advice: You can't start a sentence with a conjunction. Most of us were conditioned to nix these improper sentence-starters from the moment we put our pencils to our cat-covered composition notebooks. We were taught it, we believed it, and it's absolutely false.

There's no single authority on the English language, which is why we get things like MLA vs. APA vs. Chicago Manual of Style, and so on. Language is a dynamic beast, and as long as it does its job — namely conveying ideas from the writer to the reader — whatever you decide to do with the words is acceptable. If you need an authority to tell you it's okay to start a sentence with "and," "but," or "or," you can check out Merriam-Webster, which says we've been breaking this "rule" since at least the ninth century.

So, the next time you feel the urge to correct someone for using a conjunction to kick off a sentence, you'll be arguing against the greatest style guides in the world. And you'll likely lose.

Lie: You can't end a sentence with a preposition

Similar to beginning a sentence with a conjunction, most of us were warned that ending a sentence with a preposition is an improper use of the English language, and there were plenty of red marks on our essays to back it up. It's probably best to forget most of what you learned in high school English class, though. Once you get to college, you'll have most of those rules ripped apart by a rabid TA with delusions of grandeur, anyway.

Language changes based on how it's used in everyday speech, and as we've seen with internet lingo, it can change overnight. There was certainly a time when ending a sentence with "to," "for," "of," or "with" would've made you sound like uneducated street rabble, but that time has long since passed. According to Grammarly, it's perfectly fine to end sentences with prepositions; it's simply an informal use of the language. You'll find it in virtually all written and spoken media because if you go around throwing out too many "of which"s or "to which"s, you'll sound like a pompous Victorian hipster with very few friends.

Lie: Slang, colloquialisms, and AAVE aren't 'proper' English

This one gets a little dicey. There have always been teachers who taught us that things like slang, colloquialisms, and African American Vernacular English (AAVE) don't count as "proper" English, but that teaching isn't just false — it's problematic.

Let's knock out the simple stuff first. Slang and colloquialisms are literary devices, as LiteraryDevices.net points out, used to regionalize or culturalize one's words because language just sounds better if it's relatable. You might not want to use too much slang in a term paper graded by a tenured professor at a prestigious college, but colloquial language is a good way to give your words an authentic and informal tone.

On to the meat. Linguistics researcher Geoffrey K. Pullum's title sums it up pretty well: "African American Vernacular English is not Standard English with Mistakes." AAVE is a legitimate, rule-governed dialect of English spoken by many Black Americans. It's in the same boat as Creole or, you know, regular American English. The reason AAVE is often thought to be improperly spoken American English has nothing to do with the evolution of language and much more to do with subtle racism being passed into academia, through the classroom, and onto students.

Lie: Benjamin Franklin discovered electricity

One night in 1752, United States Founding Father Benjamin Franklin decided it was a brilliant idea to take a key, a bit of string, and a kite and sail them through the tumultuous air of a thunderstorm. We might categorize this type of behavior as "certifiably insane" nowadays, but Franklin was on a scientific mission he likely didn't know would go down in mythic history as the discovery of electricity. Shockingly, this famed experiment didn't lead to the discovery of electricity at all, despite what teachers have wrongly claimed for years.

Electricity wasn't exactly a new concept in Franklin's time. The world had known about it for centuries, even if they didn't yet know how to utilize it. According to Universe Today, the ancient Greeks ran experiments with static electricity. Ancient clay pots containing iron rods and copper cylinders were found in Baghdad in the 1940s, as the BBC notes, and they appear to have been early batteries, suggesting that parts of the world may have been able to harness electricity before others knew it existed.

Franklin's experiment served its purpose, though. The Founding Father wasn't setting out to discover electricity but instead to demonstrate the electrical nature of lightning, which the experiment did perfectly.

Lie: The Great Wall of China is visible from outer space

Considered one of the wonders of human ingenuity, the Great Wall of China was one of the longest construction projects in history, both in physical length and length of time. It's so long, in fact, that many of us were told in school that it's visible from space. Wrong. Here's the thing about visibility: The benchmark for human vision is only 20/20, and even with the best possible human vision, which Moorestown Eye Associates claims to be 20/10, a person couldn't actually see the Great Wall from outer space.

NASA points out that this myth was so widely believed, it made its way into textbooks. Some even claim the wall is visible from Earth's moon, which is even more of a stretch. The truth is you can't even see the wall from the International Space Station with the naked eye, but it doesn't take a whole lot of work to bring it into view from there. A Chinese astronaut was able to photograph the wall with 180mm and 400mm camera lenses while on the ISS. Experts say, however, that the wall was only visible because the photos were taken under perfect conditions: the structure is no wider than a house and is a similar color to its surroundings.

Lie: You lose the most heat from your head

"Put your hat on before you go out for recess," our teachers would scold. "You lose most of your body heat through your head." None of us thought to refute it. Granted, we were young, but it made sense with everything else we were taught about heat movement. After all, heat rises, right? Well, not exactly. Warm air rises, but your body sheds heat in every direction through radiation, conduction, and convection, so "heat rises" has little to do with the biological thermodynamics of the head. Unless, of course, your head is filled with hot air.

According to The Guardian, the whole "losing more heat through your head" thing is nothing more than a popular myth that likely cemented itself into society through a 1970s survival manual published for the U.S. Army. It claimed, in one line, that you lose "40 to 45 percent of body heat" from an uncovered head, and from there, the myth spread like an inconsequential disease that hat manufacturers are thankful for. As a lucky break for hatless children everywhere, two researchers at Indiana University published a study on medical myths in 2008 and totally debunked this lie. Now, they do say you're more likely to notice cold temperatures on your head and face because of the plethora of nerve endings in the area, so you'll likely be more comfortable wearing a hat in winter, but it's not a health crisis if you don't.

Lie: Newton discovered gravity when an apple fell on his head

Physics isn't the easiest subject to get young people interested in, but it's important. Besides being a necessary evil for anyone interested in a STEM career, physics is a unique subject, as it's the field that studies the rules of our existence. One way teachers seem to try to get kids to pay attention in these initially dry classes is with lighthearted anecdotes, such as the famous one about Sir Isaac Newton and the apple. It goes something like this: Newton was lounging against an apple tree in his mother's garden when (plop!) one of the fruits fell and bonked him on the head, and with a bang, Newton conceptualized gravity ... except that's not how it went down.

The Independent explains how gravity wasn't exactly a new concept to Newton. He'd thought about the subject long before the falling apple. Better yet, there's no evidence suggesting the falling apple ever hit him. In fact, all accounts, of which there are few, claiming the apple sparked Newton's insight into gravity are secondhand at best. Even if the anecdote were true, it only influenced a small part of his theory, and it certainly didn't happen instantaneously. The majority of the Father of Physics' fascination with gravity stemmed from his interest in the Moon's orbit around the Earth.

Lie: Einstein failed math in high school

Some of the lies we learned in school were simple informational blunders, while others seem to be more benevolent falsities. Those white lies, whether believed or not, serve a purpose. For example, we have a famous and false anecdote about Albert Einstein. The great physics master and formulator of the theory of relativity was supposedly a poor student in high school who performed so badly in math that he actually failed the subject. Of course, this never happened.

The truth, according to The Washington Post, is that Einstein was a fantastic student who excelled from a young age in just about every subject he studied. The only known time when this maestro of relativity failed was during his first crack at the entrance exam for Zurich Polytechnic, and he only failed because the exam was in French, a language he wasn't very familiar with.

Whether or not Einstein actually failed math doesn't affect the usefulness of the myth. Though it may cost teachers some perceived integrity later in a student's life, the anecdote tells children that even if they fail now, they can succeed later on in life. It's heartwarming and encouraging.

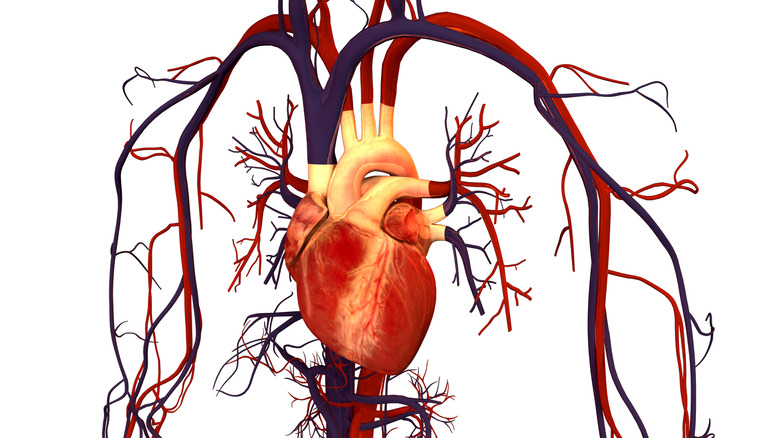

Lie: Deoxygenated blood is blue

It's easy to see why one may think deoxygenated blood is blue. If you happen to have a skin tone where your veins are easily visible, they appear blue-green through your skin, and as we all know, with the exception of the pulmonary veins, veins carry deoxygenated blood. Ergo, deoxygenated blood is blue. That at least seems to be the sort of fallacious logic that's led teachers to perpetuate this myth through the classroom. From primary school all the way through university and in every diagram of the circulatory system, we see this. In reality, all blood is red. Veins, too.

According to Medical News Today, blood is never blue, regardless of its level of oxygenation. Oxygen-rich hemoglobin makes blood appear a brighter red than the deoxygenated stuff, but it isn't a drastic enough change to shift blood to a different wedge on the color wheel. So, why do blood and veins appear blue from the outside when visible? The Australian Broadcasting Corporation explains that the false color perception comes from a mix of variables, but it basically boils down to this: Red light penetrates deeper into lighter skin and much of it is absorbed by the blood in the vein, while blue light is reflected back before it gets that far. It's science.

Lie: Bats are blind

Bats' abilities to perceive the world around them are superpowers compared to humans' limited sensory capabilities. Their big ears capture reflected squeaks and clicks to paint a detailed sonic map that allows them to avoid obstacles and catch meddlesome mosquitos. Sonar is pretty useful when you're nocturnal, especially if we're to believe that bats are blind, which has been wrongly taught in schools everywhere for as long as most of us can remember. The myth has pervaded society deeply enough to give rise to phrases such as "blind as a bat," but idioms don't have the power to make falsities true.

In actuality, bats don't just have super hearing; they also have super light-dark vision, which National Geographic says is three times better than human vision. According to the United States Geological Survey, bats can see (yes, with their eyes) in conditions humans consider "pitch black." This is really the only type of vision they need, and it's also the only vision of theirs that's any good. It's a perfect adaptation to their nocturnal lifestyle. But what bats gain in light-dark vision, they lose in color vision and sharpness. So, bats won't likely be painting million-dollar masterpieces anytime soon, but they can see well enough to keep from getting tangled in your hair.

Lie: You need to drink eight glasses of water each day to be healthy

Somewhere along the line, the world decided we couldn't be trusted to keep track of our own water intake. We're bombarded with the reminder constantly, on television, from doctors, online, and — yes — in school: You need to drink at least eight glasses of water each day in order to maintain good health. You'd think that since this rule of hydration is present everywhere in society, it must be true, but no. It's a lie so insidious that even many doctors have bought into it, but it's just a myth.

As explained by MDLinx, there's never actually been a study done on how much water a person should drink each day, and that's mostly because it's not easy to calculate. Body weight, exercise, temperature, electrolyte intake, food intake, pH balance — pretty much everything affects how much water the human body needs, not to mention all the possible differences in glass size. So, if eight isn't the perfect number, how much water should a person drink every day? Exercise physiologist Dr. Tamara Hew-Butler from Oakland University explains it concisely on an episode of "Adam Ruins Everything": You only need to drink when you're thirsty. The human body was built for this, and it knows when and when not to add more liquid. Keep that in mind next time you try to choke down an extra liter before bed.

Lie: If you get too cold, you'll catch a cold

Your mothers, grandmothers, and, of course, your teachers probably warned you about spending too much time out in the cold, especially if it was both cold and wet, because you'll catch a cold from being cold. Of course, that's not how any of this works. There's a kernel of truth in the lie, but we'll get to that in a second. The common cold is usually caused by a rhinovirus or a similar culprit, and the human body can't make a virus out of exposure to winter weather. According to Winchester Hospital, viral infections are more common during winter months for a plethora of reasons that have nothing to do with your body temperature. Low humidity allows viruses to live longer outside of a host, people spend more time in poorly ventilated indoor environments, the protective mucus inside of your throat and sinuses becomes drier and more easily infected, and so on.

Now, here's the kernel of truth, as Discover Magazine explains: Lowered body temperature wreaks havoc on the immune system and makes one more susceptible to infectious disease. So while winter weather isn't going to give you a virus by itself, it still has real consequences for the human body if you don't dress properly for outside temps, but the only health issues you're likely to find as a direct result of those cold temperatures are frostbite and hypothermia.

Pretty much everything you learned from the food pyramid is a lie

In the United States, students have been taught "proper" dietary nutrition through the USDA food pyramid since 1992. In the first iteration of the pyramid, people were expected to eat an extremely grain-heavy diet while avoiding all forms of fats and oils. Sure, have some vegetables and up to six combined servings of meats and dairy, too. Well, even the USDA realized this wasn't a healthy way to fuel our bodies, so they replaced the classic pyramid with MyPyramid in 2005. The new pyramid added activity into the mix and focused more on helping individuals tailor a healthy diet unique to them, but it was heavy on carbs and milk while demonizing all fats. This too, as it turns out, isn't great.

In truth, as Scientific American explains, the thinly detailed dietary advice in every version of the USDA pyramid leaves out too much to be a comprehensive guide and focuses too heavily on foods that are only good for humans in moderation. There's more than enough evidence to show that you can maintain great health while eating a low-carb diet. Fats like omega-3s shouldn't be avoided. Your sodium intake matters. And so on. While the dietary information housed in USDA graphics certainly means well, modern evidence suggests the pyramids don't really know what they're talking about. You're better off keeping up with the latest research.

Lie: Thomas Edison invented the light bulb

Despite what Edison-heads may want you to believe, Thomas Edison didn't actually invent the light bulb. According to Science Focus, the first known person to successfully light up a filament with electricity was an English chemist by the name of Humphry Davy around the turn of the 19th century. He ran current through a high-resistance wire and (poof!) there was light, but his design was nothing more than a fancy science fair project. There was no way his thick wire would've been efficient on an industrial scale. Cue Warren de la Rue, another British chemist, who took the wire idea, made it from a thin, high-endurance metal, and sealed it inside an evacuated glass tube, creating a light bulb almost 40 years before Edison. De la Rue's bulb, like Davy's, would never be produced on a large scale, mostly because the filament was made of platinum.

As they say, the best lies contain a grain of truth, and this particular fib is no exception to that rule. Thomas Edison didn't invent the light bulb, but he did create the best light bulb of his time. Edison's design used a thin carbon filament and a better vacuum than de la Rue's design, giving the bulb both longevity and commercial producibility. He may not have been the first person to invent the light bulb, but Edison's company is the reason we have electric lighting in our homes, even if his design has grown rapidly obsolete since the introduction of LEDs.

Lie: There are three states of matter

In the world of, well, everything, we're taught in basic science courses that there are only three states of matter: solid, liquid, and gas. We learned it first with water vapor and ice cube experiments in primary school, and it's a fact that's stuck with most of us ever since. But what we were taught then wasn't just a mild simplification; it erased several less prevalent states of matter from popular knowledge.

As Forbes explains, there are twice as many states of matter as we were initially taught, though you're only likely to encounter the basic three here on Earth. The fourth state of matter, plasma, forms when atoms are pumped with enough energy to strip away their electrons, pushing them past the gaseous threshold into a whole new state that behaves by different rules. The fifth and sixth states of matter, to our knowledge, have only been created under laboratory conditions. They're known as Bose-Einstein condensates and fermionic condensates. A Bose-Einstein condensate forms when a collection of bosons (one of the two broad classes of subatomic particles) is cooled to its lowest energy state, whereas a fermionic condensate forms when fermions (the other class, which includes electrons) are cooled to their lowest energy state and pair up to create a superfluid. Since the world is as confusing as it is interesting, it would take a major crash course in quantum physics to really understand these states further.

Lie: Humans evolved from apes

When you visit the zoo, one order of animals stands out above the rest: the primates. They look suspiciously like us. They have hands, faces, and demeanors that remind us of our favorite uncle or our nose-picking, poop-slinging best friend. (You have one of those, right?) And why shouldn't they? We are, after all, hairless great apes. We just happen to stand straighter, and some of us are smarter than our tree-climbing cousins. You were probably told in school, as most of us were, that we evolved from apes like the gorilla or the chimpanzee, but that's not really how evolution works.

About 6 million years ago, according to the Smithsonian, protohumans and apes set off on divergent evolutionary paths. We have yet to find any physical evidence of a single common ancestor, but based on genetic evidence, there should be one trapped in the fossil record somewhere. What scientists can see from tracing back our evolutionary history, though we only know bits and pieces, confirms that this ancestor was indeed "apelike." So while the claim about humans evolving from apes is partially true, it's widely misunderstood. Humans and the other modern apes share a common primate ancestor that was neither human nor any of the apes alive today. Until we find the "missing link," it'll be impossible to say for sure if this creature was an ape or some sort of proto-ape deserving of its own classification. So, don't take to the trees just yet.