The Scariest Predictions That May Come True In The Next Decade

The future is scary. Anything could happen, and humanity may not be prepared for it, as a species or as a society. Look at all of the sci-fi films of the last several decades, warning about what could go wrong if a killer Arnold Schwarzenegger robot time travels back to the 80s or if a bunch of machines put Keanu Reeves in virtual reality.

While those particular examples are unlikely now, there are some things that could potentially happen in the next decade that would thrust humans into uncharted territory, leaving us all to sink or swim. Climate change is probably the biggest modern example of this. It's unclear what will happen exactly, but you know that it probably won't be good.

There are other threats looming on the horizon, though. They're just out of reach right now, but they're coming, much like an Arnold Schwarzenegger killer robot from the future, and if you're not ready for them, who knows what might happen.

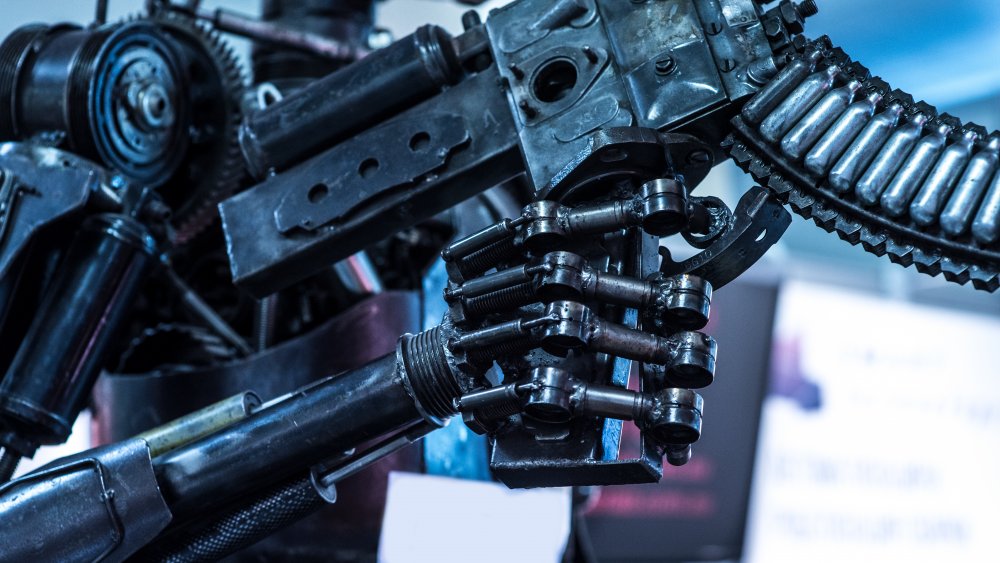

Killer robots are coming

Right now, there are drones, remotely piloted vehicles, that can be used in warfare. There is a bottleneck, though, that militaries across the world are eager to remove: the human pilot. Humans need things like food and sleep, and can generally control only a single vehicle at a time. As reported by Vox, that limit could be overcome with LAWs (lethal autonomous weapons). LAWs are like drones, but they are piloted entirely by AI and require no human intervention. Most important is the L in the name: lethal. LAWs would be authorized to kill without human oversight.

This may not sound like an awful idea on paper. Instead of sending humans to fight wars, there would basically be fighting robot contests. No humans are hurt, right? Unfortunately, it's not that simple. For one thing, once the enemy's robot army is destroyed, the humans would be next, and there's always the chance of collateral damage.

The biggest problems with LAWs come from what-if scenarios. What if they get hacked? What if a bug in their programming causes them to attack the wrong targets? What if a rogue nation or terrorist group gets their hands on them? Scariest of all, what if an army of LAWs is programmed to commit genocide? If any of these things did occur, would we be able to stop them?

Smart homes will be hacked

Ransomware has become a major threat. These malicious programs take over a computer, typically encrypting files or threatening to delete them, and then demand that the user pay to reverse the encryption or prevent the deletion. Imagine years of work or sensitive files being lost or stolen unless you fork over large sums of cash.

Now imagine this happening with your smart home. The so-called Internet of Things has made life very convenient in some ways, but it introduces a whole new world of potential downsides. According to The Economist, smart homes could become a very lucrative target for malware and hacking. Say you buy a fancy new smart oven, and it gets compromised. You effectively no longer have that oven in your house, which would make cooking, and thus life, extremely difficult. Your choices may come down to buying a new oven or paying the ransom. And what if your smart air conditioner gets infiltrated in the middle of a heat wave?

Similarly, cars are getting smarter now, too. If your sweet new car gets hacked, you're looking at the possibility of thousands of dollars lost in seconds. While manufacturers can add safeguards, no system is impenetrable. Damaged cars can often be fixed, but if you buy a car with a fatal flaw in its security, a hacker or piece of malware could effectively "total" the whole line of vehicles by bricking them.

Space debris could wipe out satellites

People rely on satellites more than ever. Even in an era when satellite television and other obvious uses of the tech are on the decline, there are hundreds of other ways you're using them without knowing it. GPS is a big one, but even basic communications systems often rely heavily on satellites. Without those helpful floating machines in Earth's orbit, you'd suddenly lose a whole lot of things that you depend on every day.

New satellites are constantly being launched, but old ones are slow to be decommissioned or otherwise cleaned up. This leads to a buildup of what's known as orbital debris, and according to The Verge, it is starting to become a big concern. In the 2013 movie Gravity, Sandra Bullock plays a NASA astronaut working on the Hubble Space Telescope when a missile strike on an old satellite sets off a chain reaction of debris flying through orbit. This scenario wasn't made up for the film. It's a real phenomenon, called Kessler syndrome.

If this were to occur, debris that doesn't burn up in the atmosphere could reach Earth, causing destruction or even death. Even more alarming, the chain reaction might just keep going and make it impossible to launch new satellites, as the junk still in orbit could quickly destroy them.

Automation will continue to affect employment

Throughout the last century, humanity has built machines that make jobs easier, often at the cost of employment. A classic example is horse-drawn buggy manufacturing. Once cars became common, that industry dried up, because people no longer needed buggies to get around. As workflows improve, more jobs become unnecessary, and it's not always possible to replace them.

In 2005's Charlie and the Chocolate Factory, Charlie's father is laid off from his job putting caps on toothpaste tubes and is replaced by a machine. At the end of the film, he gets a new job servicing the machine that took his job, so it's a happy ending. Except that there were several other employees who did the same work, and only a fraction of that workforce is needed to service the machine. The others might not even have the skills or training to work on it, as Charlie's dad did.

This is a very basic example of unemployment stemming from automation, and according to the Brookings Institution, it's starting to become a very big problem as technology improves. As more jobs are taken over by AI and machines, more people will be out of work, which does not bode well for capitalist societies built heavily around employment. Some possible fixes, such as a guaranteed minimum income for all, have been proposed, but so far no clear solution has emerged.

Surveillance will get even creepier

Surveillance has become a daily part of life, with cameras following you in public and digital breadcrumbs showing what people do and consume every day. But things are likely to get even scarier in the near future. As noted by The Verge, AI is going to be a game changer in the world of surveillance. Not only will technology be able to track you at every step of your life, but AI will collate it all without human oversight.

What this means is that those doing the watching will have the data and processing power to effectively monitor a person 24 hours a day, all without needing a human to examine the data. As noted by TechCrunch, the future of privacy will likely be less about preventing surveillance and more about users consenting to how their data is used.

As an example, police in Chicago recently announced a partnership with Ring, the maker of video doorbells, whereby authorities can request access to videos from citizens' devices. The footage is being collected regardless, but the owner has to agree to it being used by police. Whether this model is the future of privacy, and whether average people will accept it, is anyone's guess at this point, but full, round-the-clock surveillance for everyone is a very real possibility in the near future.

Genetically modified viruses could wipe humanity out

While vaccines and better health practices brought a great many communicable illnesses under control over the last century, it is possible that some of those past diseases might come back to haunt us. With the arrival of gene-editing tools like CRISPR, humanity is moving into an era where it can tailor medicine to an individual person, matching treatment directly to their genes. However, scientists are beginning to worry about the opposite: genetically modified viruses engineered to be more lethal.

Samples of some eradicated deadly viruses still exist, and with the right tools and knowledge, it is becoming more and more possible that a malicious actor could modify them and bring them back. The genome sequences of many old viruses are readily available online. Reverse engineering these to create a new superbug would take time and effort, but it could be done.

What's more, it's even possible to create brand-new viruses from scratch using these tools. With enough skill, something akin to the superflu from The Stand could be made in an enemy nation's government-sponsored lab in the next few years, according to Newsweek. These viruses could even be tailored to attack only certain targets, like people with red hair or only males, making them effective tools for genocide.

The end of antibiotics is near

Antibiotics have been a cornerstone of modern healthcare. But in recent years, doctors have begun encountering antibiotic-resistant infections. At first, there were only a few, but now more and more bacteria are developing resistance. So far, the response has been to encourage people to take their full course of antibiotics instead of stopping when they feel better, according to NBC News. This only slows things down, though. There will eventually come a time when antibiotics are no longer effective.

What will a post-antibiotic world look like? It's not entirely clear just yet, but it would mean more than just not being able to get medicine for basic illnesses and infections. According to The Guardian, doctors could lose the ability to perform surgery safely, as they would be unable to fight off infection after the procedure. There may be other antibiotics out there, medicines that kill infectious bacteria without destroying human tissue, but even if they were discovered tomorrow, it would take years of testing and development before they could be widely used.

Even if new antibiotics are discovered, they would likely be only a stopgap solution. Chances are, bacteria would develop resistance to those, too. One promising possibility is genetically individualized medicine. Such treatments are still very costly, in both money and time, so it will be several years before they become as ubiquitous as antibiotics.

The internet may break apart

Society has had a global internet for only a few decades, and it has already changed almost every aspect of life. The innovations aren't likely to stop any time soon, either. Global communication has been a revolution in human existence. That's why the idea that it may soon break apart is so frightening. Experts have dubbed the threat "the Splinternet" or the "Internet Cold War": a single global internet is becoming a problem for countries that can't get along with each other offline.

Essentially, the Splinternet would be multiple concurrent internets that aren't connected to each other, divided by region, ideology, or other lines, according to the BBC. These parallel internets would be difficult or even impossible to move between, cutting people off from one another and allowing for insular, more tightly controlled networks. This is especially appealing to more authoritarian countries, but even countries that simply have different laws governing the internet might begin to seek out alternative regional networks.

Russia is already in the process of launching its own separate internet, according to PC Magazine. North Korea is believed to have had one for several years, and China has a de facto isolated internet thanks to the so-called Great Firewall, which blocks access to anything the Chinese government deems a problem. In just a few years, you might see a direct schism between the open internet and a more locked-down, authoritarian-friendly version.

Deepfakes will make it impossible to tell what's real

In these early decades of the internet, people have grown more and more accustomed to fake photos. Modified or "Photoshopped" images have created tons of misinformation, but they have limitations. Experienced artists can often spot the traces that edits leave behind, and modifying video has been out of reach for almost everyone but Hollywood studios with plenty of computing power, artists, animators, and, of course, cash to sink into it.

Deepfakes change all of that. Imagine a video where President Obama swears openly during a presidential address and drops pop culture references to Get Out and Black Panther. Turns out, you don't have to imagine it. It already exists. It's not President Obama, though, but a deepfake voiced by Jordan Peele, who does a solid Obama impression. People have already learned to be wary of images, but in the next decade you may find yourself not trusting video, either.

According to USA Today, deepfakes are typically created with the help of AI and existing footage of people. While there are some ways to detect them now (for example, the subjects in deepfakes often don't blink normally), the fakes are likely to improve as time goes by. As reported by The New York Times, media companies are already building tools to detect deepfakes before they do too much damage, but the clock is already ticking on the first deepfake video that makes headlines across the world.

AI could enable a whole new era of scams

The rise of the internet brought a whole new world of confidence tricksters, with old schemes repurposed for the World Wide Web. Thanks to spam filters and better public awareness, these cons aren't quite as effective as they used to be. But the next evolution of scams may arrive very soon. Because conversational AI learns by examining human conversations, scientist and sci-fi author David Brin has an eerie prediction: eventually, AI could know enough about human interaction to manipulate humans.

Brin calls them HIERs (Human-Interaction Empathic Robots). These would be AI programs (and eventually robots) that could mimic a human and exploit our natural empathy well enough to get people to do basically anything. The 2014 film Ex Machina dramatizes exactly this. Spoilers ahead, so skip to the next paragraph if you haven't seen the movie. In the film, the humanoid robot Ava manipulates two humans into doing what she wants by exploiting how they feel about her.

These HIERs could eventually become scammers, yes, but they could also become charismatic cult leaders, spread manipulative political ideas, or take advantage of humans in ways that would be difficult or even impossible for another human to pull off.

AI could be trained to be biased

In 2016, Microsoft launched a Twitter chat bot named Tay. Described as a machine learning experiment, Tay could receive tweets from anyone and it would craft a reply in response based on what it learned from talking to others. You can imagine where this went. In less than 24 hours, Microsoft took Tay offline because strangers on Twitter taught it to reply with horribly offensive racist and sexist statements, even to completely innocuous tweets, according to Gizmodo.

The problem with machine learning is that it often learns from humans, who have biases and unchecked beliefs of their own. For example, according to Time, many facial recognition apps have trouble distinguishing non-white faces because they encounter relatively few of them during development. When facial recognition AI is trained on few dark-skinned faces, it becomes less able to recognize them, and may fail to detect some people of color at all.

While the Tay incident shows how easy it is to deliberately teach an AI awful things, humans can also unintentionally train AI to share our ingrained biases or discrimination. Police robots and AI are already in development, even as police departments across the world face increased scrutiny over discrimination. Imagine an AI trained on data from the NYPD's "stop-and-frisk" days, and how much work might be required to undo that. This is called AI discrimination, and as long as machines learn from flawed humans, it's something people need to be very careful about.

Endless corporate cryptocurrencies

Cryptocurrencies like bitcoin are still in their infancy, with prices rapidly fluctuating and little widespread knowledge about them. While they're hot in the tech industry, for those outside of it, cryptocurrency is still a mystery. But the underlying technology is extremely appealing to big tech companies, who are beginning to take notice.

Facebook is currently attempting to launch its own cryptocurrency, called Libra, in 2020, but it's only one of several companies investing in cryptocurrencies. Amazon, eBay, and Starbucks are just a few companies interested in the tech, according to Blockonomi. Google is perennially rumored to be starting its own currency sometime in the near future, too. Cryptocurrency is typically built on blockchain technology, in which every transaction is added to a public ledger, which in theory makes it more secure, according to Hackernoon. On top of this, controlling a cryptocurrency would let companies eliminate the need to deal with payment processors and banks, as they would control the entire purchasing process.
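Why would a public ledger be harder to tamper with? One core idea can be sketched in a few lines of Python. This is a hypothetical toy, not how Libra, bitcoin, or any real blockchain is implemented: each entry stores a hash of the entry before it, so quietly editing an old transaction breaks the chain. The `Ledger` class and its `add` and `verify` methods are invented for illustration, and there is no network, mining, or consensus here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


class Ledger:
    """Hypothetical toy ledger: each entry commits to the hash of the one before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(body):
        # Serialize with sorted keys so identical data always yields an identical hash.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def add(self, sender, recipient, amount):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"sender": sender, "recipient": recipient, "amount": amount, "prev": prev}
        self.entries.append({**body, "hash": self._hash(body)})

    def verify(self):
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("sender", "recipient", "amount", "prev")}
            # An entry is valid only if it links to the previous hash
            # and its stored hash still matches its own contents.
            if entry["prev"] != prev or entry["hash"] != self._hash(body):
                return False
            prev = entry["hash"]
        return True


ledger = Ledger()
ledger.add("alice", "bob", 5)
ledger.add("bob", "carol", 2)
print(ledger.verify())  # True

ledger.entries[0]["amount"] = 500  # quietly rewrite history
print(ledger.verify())  # False
```

On its own, this only makes tampering detectable. In a real cryptocurrency, the security claim also depends on many independent parties holding copies of the ledger, which this sketch leaves out.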

The problem, as noted by The Guardian, is the possibility of multiple competing currencies controlled entirely by the companies that offer them. Imagine a situation like the upcoming glut of streaming-service exclusives, but with money instead: you'd need to keep your funds in multiple currencies to pay for everything. Companies could even prevent you from moving your money back out of their currency, or devalue it in various ways, making the future of money very uncertain.