Inside Moore's Law, The Surprisingly Accurate Prediction About Tech From 1965

Predictions about technology are usually way off the mark. As a prime example, in 1903, a New York Times reporter wrote that manned flight would likely require "the combined and continuous efforts of mathematicians and mechanicians from 1 million to 10 million years." Per BigThink, the Wright Brothers took off in their plane from Kitty Hawk just two months later.

But sometimes, forecasts do hold true. This seems to be the case with a prediction made by businessman and engineer Gordon Moore in 1965. If you've read a decent amount of tech coverage, you've probably come across references to "Moore's law." Many articles describe it as the prediction that the processing power of humanity's best computers will double every two years. (More extreme articles use it to suggest that an AI apocalypse must be just around the corner.)

In reality, Moore's law is more specific than this — and more technical. Moore actually predicted that the number of transistors in a microchip will double every two years. Now, to understand that, you'll need to know the meaning of the terms "microchip" and "transistor," and what these electronic components do. That may sound dull, but understanding these terms will help you better grasp the meaning of Moore's fascinating prediction, and why the prediction has managed to (mostly) hold true for over 50 years. Before we get into the weeds (or the wires) of explaining the techno-jargon in Moore's law, let's consider the history behind Gordon Moore's famous prediction.

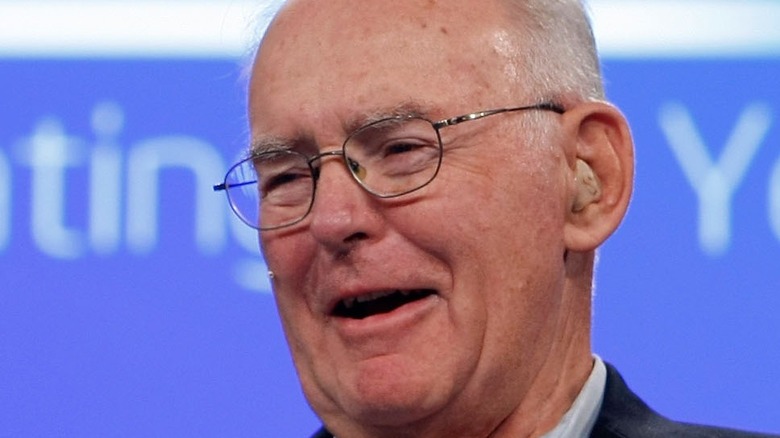

Intel co-founder Gordon Moore made his famous prediction in a 1965 article

According to the Encyclopedia Britannica, Gordon Moore received his PhD in chemistry and physics from Caltech in 1954. In the years that followed, Moore worked for companies that manufactured transistors for early computers. Then, in 1968, he co-founded his own: Intel. (Maybe you've heard of it?) Today, Intel is one of the biggest producers of microchips in the world; since 2021, only Samsung has surpassed it, per SmartCompany.

A few years before he founded Intel, the journal Electronics asked Moore to write an article laying out his thoughts on the future of the electronics industry. In 1965, Moore revealed his now-famous prediction in an article titled "Cramming more components onto integrated circuits" (posted at the Intel Newsroom). (If you're like us, you probably know "integrated circuits" by their more basic name, "microchips.") In that article, Moore noted that the number of transistors that could fit on a single microchip was doubling roughly every year, and he predicted that this trend would continue. Per Britannica, Moore revised this prediction in 1975 to a slower rate, a doubling every two years, which is the version of Moore's law best known today.

Moore's law is just one man's prediction, not some fundamental law of nature. It's a "law" that, in any given year, could certainly be proven wrong. But, as we'll see, Moore's law has held surprisingly true over the decades since he made his prediction.

Moore's law predicts an exponential increase in the number of transistors that fit on a microchip

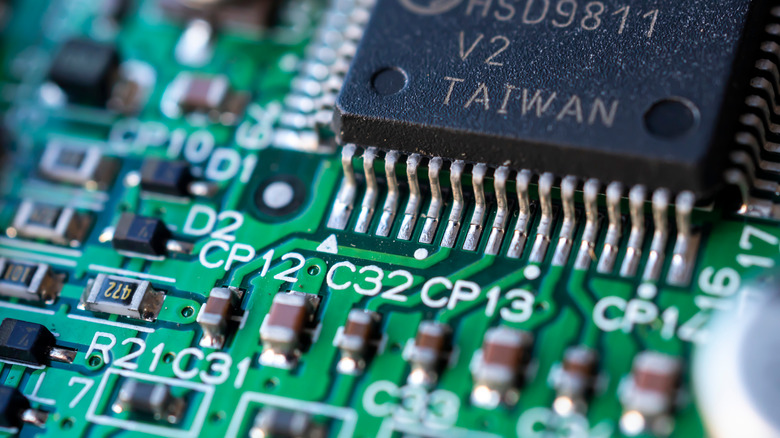

Before we look at the accuracy of Moore's law, it's time for the "fun" part: understanding just what Moore meant when he spoke about transistors and microchips. Based on Moore's prediction that the number of transistors on a microchip will double every two years, you've probably inferred that a microchip is some important component of a computer, and a transistor is an even more basic building block that makes up a microchip. If so, you'd be correct.

You can think of a microchip as the tiny, beating heart inside each computer. Per ThoughtCo, microchips are networks of tiny electronic components arranged on a small chip of semiconductor material, typically silicon. These collectively regulate the flow of electric current in a computer, allowing the computer to perform logical functions. (So, in a sense, the brain may be a better analogy than the heart; a microchip's components working together to regulate current flow is a bit like how the neurons work together in the brain.)

As Britannica adds, the earliest microchip was developed in 1958. Today, there are a few types of microchips (ranging from a few centimeters to a few millimeters in length), but they all generally serve as the electrical basis for computers' logical computations. Microchips are so important (and so ubiquitous) that an ongoing chip shortage has made loads of goods more expensive, from cars to PlayStations, as CNBC reports.

Microchips are the beating heart of computers, and transistors allow them to function

Transistors are even more fundamental — they're what allow microchips to function. Programmable computers need components that can make electric currents switch between "off" and "on." (The 0s and 1s of code represent these "off" and "on" states.) As Popular Mechanics explains, early computers used vacuum tubes as the tool that would switch a current on and off. But vacuum tubes are glass cylinders; they're big and fragile. For computers to reach their true potential, a much smaller tool was needed.

Along came the transistor. Per Encyclopedia Britannica, the earliest transistors were developed at AT&T's Bell Laboratories in 1947. These transistors were tiny, metal, pin-like devices. Compared to vacuum tubes, transistors offered a much smaller and much more efficient means to switch electric currents on and off. As a result, transistors quickly replaced vacuum tubes as the basis of computing — allowing computers to become much smaller and more powerful. (Just look at your phone, compared to a 1950s-era supercomputer.)

For transistors to be useful in computing, though, you need a lot of them wired together. That's essentially what a microchip is: a bunch of transistors working together on a tiny silicon chip, where more transistors mean greater processing power. Gordon Moore predicted that, as time went on, the number of transistors we could fit onto a single microchip would increase exponentially, doubling every two years. Was Moore right?
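To make "doubling every two years" concrete, here is a minimal Python sketch of that kind of exponential growth. The function name `projected_transistors` and the starting count of 1,000 transistors are hypothetical, chosen only for illustration. They do not come from Moore's article.

```python
def projected_transistors(start_count: float,
                          years_elapsed: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming it doubles once
    every `doubling_period_years` (two years in the 1975 version)."""
    return start_count * 2 ** (years_elapsed / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (0, 2, 10, 20):
    print(years, "years:", round(projected_transistors(1_000, years)))
```

Under these assumptions, the count grows 32-fold in 10 years and roughly a thousandfold in 20. That is why a steady doubling adds up so quickly.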

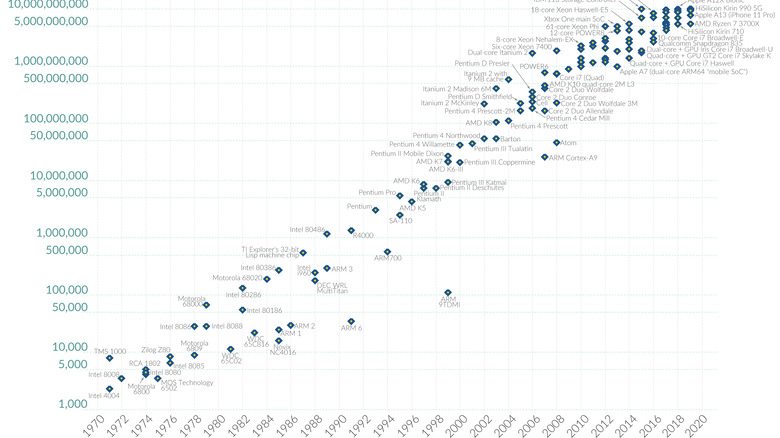

As transistor counts have grown, Moore's law has proven largely accurate

At the core of Moore's prediction was the assumption that transistors would get smaller over time. (The smaller the transistor, the more you can pack onto a single microchip.) This assumption has certainly proven correct. Per MIT Technology Review, the earliest commercial transistors were about a centimeter long. Today, they're about two nanometers — around 5 million times smaller.
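The "5 million times smaller" figure can be checked with a quick unit conversion. This is only a sketch of the arithmetic, using the two sizes quoted above. The helper name `shrink_factor` is made up for this example.

```python
NANOMETERS_PER_CENTIMETER = 10_000_000  # 1 cm = 10^7 nm


def shrink_factor(old_size_nm: int, new_size_nm: int) -> float:
    """How many times smaller the new size is than the old one."""
    return old_size_nm / new_size_nm


early_transistor_nm = 1 * NANOMETERS_PER_CENTIMETER  # about a centimeter
modern_transistor_nm = 2                             # about two nanometers

print(shrink_factor(early_transistor_nm, modern_transistor_nm))  # 5000000.0
```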

Naturally, this shrinkage has led to an increase in the number of transistors per microchip. As Intel writes, the Intel 4004, the earliest commercial microprocessor, had 2,300 transistors on its chip. Today, the chip with the highest transistor count, manufactured by Cerebras Systems, has around 2.6 trillion transistors, SingularityHub reports.

Sure enough, the path from thousands to trillions of transistors roughly followed Moore's prediction of a doubling every two years. But not perfectly. As you'll recall, Moore's initial prediction was a doubling every single year, which he later adjusted to every two years. As it turns out, the actual growth rate has been in the middle. Per Britannica, microchip transistor counts have doubled roughly every 18 months over the past 50 years. All in all, that's a pretty solid estimate from Gordon Moore, and it explains why his prediction is so famous among tech enthusiasts. As far back as 1965, Moore was able to accurately foresee the ever-shrinking size and ever-growing processing power that would characterize the tech industry for the next five decades.
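As a back-of-the-envelope check, the two data points mentioned earlier (2,300 transistors and 2.6 trillion transistors) can be turned into an implied doubling time. The sketch below assumes the Intel 4004 dates to 1971 and the Cerebras chip to 2021. Those years are not given in this article, so treat them as assumptions. Comparing two individual chips is also much cruder than fitting a trend across many chips.

```python
import math


def implied_doubling_time(start_count: float,
                          end_count: float,
                          years_elapsed: float) -> float:
    """Years per doubling, assuming steady exponential growth
    between the two counts."""
    doublings = math.log2(end_count / start_count)
    return years_elapsed / doublings


# Assumed dates (not from the article): 1971 and 2021.
years = 2021 - 1971
doubling_years = implied_doubling_time(2_300, 2.6e12, years)
print(f"about {doubling_years:.2f} years per doubling "
      f"({doubling_years * 12:.0f} months)")
```

With these inputs, the result is a little over a year and a half per doubling. That sits between Moore's original one-year estimate and his revised two-year estimate, in the same ballpark as the 18-month figure from Britannica.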

It's unclear if — and when — Moore's prediction will be broken

Over the years, tech companies like Intel have used Moore's law to set goals for their engineering teams, as TechRadar shows. In that sense, one might wonder if Moore's law is actually a self-fulfilling prophecy: a prediction about the tech industry being reinforced by the tech industry. That's a question worthy of its own article, so let's end on a different one: just how long is Moore's law going to remain accurate?

As MIT Technology Review points out, making smaller transistors has gotten harder and harder over time. So far, the tech industry has managed to keep finding new fabrication strategies, like carving transistors out of silicon using ultraviolet radiation. But, as we go smaller, these strategies become increasingly expensive. Someday, our miniaturization methods might run out or become prohibitively costly. (MIT Tech Review notes that the number of companies willing to work on developing state-of-the-art microchips has declined over the years, to just three; in 2002 there were 25.)

Other experts say it's time to stop worrying about Moore's law altogether. Rather than increasing transistor counts, they argue that we should focus on developing algorithms that can use the transistors we already have more efficiently. Per MIT Tech Review, some advocate coding in C, which is a more challenging programming language than competitors like Python, but also requires far fewer computations. For now, it's unclear which side will win out: those who emphasize expanding our hardware or those who prioritize efficient software.