Inventions That Actually Made Lives Worse

The archaeological record tells us that approximately 2.6 million years ago, early humans realized that stones could be used for hammering, cutting, and other tasks crucial for their survival. These stone tools can be considered the first known inventions. Since then, humanity has kept on inventing, and the steady march of technology has enabled us to increase food production, make incredibly fast calculations, travel to faraway places, overcome diseases, and meet many other challenges. Though physical products tend to be the first examples that come to mind, inventions aren't limited to tangible objects; an invention can be a previously unheard-of idea or a new, more efficient way of doing things, and may also build upon existing inventions.

That said, even when they are created with the best of intentions, inventions can be a double-edged sword. Some provide powerful and effective solutions while using up nature's finite resources; others may efficiently address pressing problems while creating new and unforeseen ones. Thalidomide, for example, was initially deemed safe for pregnant women to take for morning sickness and better sleep; later research revealed that over 10,000 babies were either born with severe developmental problems or died mere months after birth, prompting radical shifts in drug testing and regulation.

Many other cases of innovations-gone-wrong exist, each of them reinforcing an important lesson: When misused, overused, or poorly executed, even the most helpful inventions may come with unexpected detrimental costs — or worse, do significantly more harm than good.

Nuclear weapons

The development of nuclear weapons came about due to the discovery of nuclear fission, in which a tremendous amount of energy is released when an atom's nucleus splits. The United States was the first to successfully harness this for warfare, and remains the only country to have used nuclear weapons in combat.

Unsurprisingly, as soon as the world witnessed what a nuclear strike really does, a handful of powerful nations raced to develop their own nuclear weapons technology; meanwhile, the rest of the world called for the total elimination of nuclear weapons, deeming them too dangerous to exist. China, France, India, North Korea, Pakistan, Russia, and the United Kingdom have formally acknowledged the existence of their own nuclear weapon programs. South Africa developed its own nuclear weapons in the 1970s but dismantled the program completely by 1991, making it the only country to have built a nuclear arsenal and then voluntarily given it up.

Much has been written about the catastrophic, well-documented effects of nuclear weapons testing and deployment, not just on human life, but also on the environment and other organisms on the planet. And the 2025 Israel-Iran conflict, in which the former struck the latter's military facilities as a "preventive" measure against the construction of a nuclear bomb, is proof that even the mere idea of nuclear weapons existing is enough to disrupt the uneasy peace that they supposedly help maintain.

Trans fats

In the early 1900s, scientists discovered that adding hydrogen to liquid vegetable oils turns them into fats that stay semi-solid at room temperature, even when they're not frozen or refrigerated. This method, called partial hydrogenation, made it possible for manufacturers to extend the shelf life of food products while improving their appearance, mouthfeel, and taste. Because ingredients containing partially hydrogenated oil (e.g., margarine) were also cheaper to use than butter or lard, they quickly became a standard addition to many commercial baked goods and snacks.

However, since partial hydrogenation does not affect all the chemical bonds in a particular oil, the process creates trans-fatty acids (or trans fats). By the 1990s, researchers found and established some disturbing connections between the consumption of trans fats and a number of serious health conditions, including elevated low-density lipoprotein (LDL) cholesterol (which raises one's risk of cardiovascular disease), obesity, and diabetes. Moreover, highly processed food products that contain partially hydrogenated oils also tend to be high in calories, sugar, fat, or sodium, compounding the potential health risks that come with consuming them.

In 2015, the Food and Drug Administration determined that partially hydrogenated oils, the primary dietary source of artificial trans fats, are not "generally recognized as safe," and that food manufacturers should stop using them in their products. However, despite intensified campaigns from the World Health Organization and other concerned groups, trans fats have yet to be completely eliminated from store shelves.

Artificial lighting

Can you imagine a world completely devoid of electric lighting, or of artificial lighting altogether? There's little doubt that the invention and spread of artificial lights, from the coal-gas lamps of the 1700s to today's fluorescent lamps and light-emitting diodes (LEDs), transformed the way society functions. The night no longer had the power to bathe the streets in complete darkness, making it possible to work after sundown and reducing the threat of criminal activity at late hours. However, research has revealed that the increased flexibility and improved security offered by artificial lights come at a cost to the environment, to other species, and even to ourselves.

Light pollution has disrupted our body clocks and sleep-wake cycles, as well as diminished our ability to see and appreciate the stars in the night sky. According to a 2015 review in Chronobiology International that examined 85 articles, outdoor exposure to artificial light at night (ALAN) has been observed to increase a person's risk of breast cancer; meanwhile, "chronic" ALAN exposure can have serious effects on the brain, heart, and metabolism.

Lastly, a 2018 article in Ecology & Evolution explored the different ways that ALAN negatively affected nocturnal insects, including throwing their navigational capabilities out of whack, making them more susceptible to predation, and even blinding them.

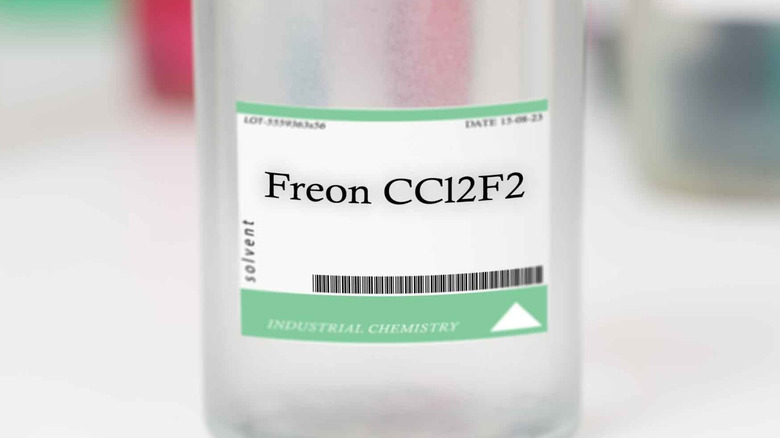

Chlorofluorocarbons

During the early 1900s, home air conditioners and refrigerators relied on refrigerants like ammonia, sulfur dioxide, and propane, chemicals that could be lethal in the event of a leak. A team of corporate-funded researchers (including a chemical engineer named Thomas Midgley, Jr., who also invented leaded gasoline) sought to find a safer alternative; the result was a type of chlorofluorocarbon (CFC) called dichlorodifluoromethane (Freon-12).

Freon-12 quickly became the refrigerant of choice for many American home appliance manufacturers, and the only coolant permitted in American public buildings. Moreover, the "safe" reputation and relatively low cost of production prompted companies to use CFCs as propellants for products in aerosol cans (such as hair spray, paint, and so on). By the 1970s, companies worldwide were reportedly producing almost a million tons of CFCs annually. But around the same time, scientists discovered the unexpected downside to using CFCs. Once CFC molecules reach the stratosphere, ultraviolet radiation from the sun breaks them down, setting off a destructive chain reaction that could deplete the ozone layer, Earth's invisible shield from harmful solar radiation.

The smoking gun was a 1985 paper in Nature that revealed a hole in the Antarctic ozone layer, attributed to the increased adoption and use of CFCs. Two years later, the Montreal Protocol was established, aiming to eventually phase out CFCs from manufacturing. Sadly, the problem is far from over: In a 2023 paper in Nature, researchers noted a worldwide increase in the atmospheric levels of several CFCs between 2010 and 2020.

High-fructose corn syrup

Despite the fact that they're both derived from corn, corn syrup and high-fructose corn syrup have one key difference. Corn syrup primarily gets its sweetness from glucose, which is less sweet than table sugar (sucrose). Thus, food manufacturers seeking a cheaper sugar substitute add enzymes to it to convert part of its glucose into fructose, which is sweeter than both glucose and sucrose. This is why you're likely to find high-fructose corn syrup listed among the ingredients of many pastries, sodas, juices, and condiments at the supermarket.

However, scientists warn that consuming too much of this sweetener can have unhealthy consequences. According to registered dietitian Kate Patton, high-fructose corn syrup can prompt increased fat production in the liver. "Our body can only absorb and use a certain amount of fat. So, the rest of it gets stored as triglycerides (a form of fat in your blood) or as body fat," shared Patton (via Cleveland Clinic). This buildup of fat has been associated with an increased risk of cardiovascular disease and diabetes, as well as elevated blood pressure and cholesterol levels. Authors of a 2017 paper in PLoS One also observed a link between high-fructose corn syrup consumption and metabolic disorder and impaired dopamine function.

With that said, more research is needed before we can be certain about high-fructose corn syrup's negative health effects. But its ubiquity in highly processed foods, alongside ingredients of dubious nutritive value, makes it hard to consume in moderation to begin with.

Fast food

Regardless of how many disturbing fast food restaurant secrets you're aware of, one thing's for sure: fast food is unlikely to be on your (or anyone else's, for that matter) list of foods that can be considered healthy. But perhaps the most disturbing fact about fast food isn't that it's generally unhealthy — it's that despite knowing this, we just can't seem to stop consuming fast food.

Having a virtually limitless selection of tasty, competitively priced meals served hot and fast is a godsend for many a busy professional, cooking-averse homebody, or hungry teenager. But research shows that the high amounts of calories, sugar, sodium, trans fats, and other less-than-healthy components in fast food can cause a wide range of health problems, including (but not limited to) high blood pressure, elevated blood sugar, inflammation, weight gain, deterioration of the gut microbiome, and nutrient deficiencies, as well as an increased risk of cardiovascular disease, dementia, diabetes, and different kinds of cancer.

There are also other negative effects of the popularity of fast food that don't get brought up as much. Take fast food packaging waste, for example: experts estimate that food delivery and to-go meal packaging make up a significant chunk of the millions of metric tons of plastic waste generated in the United States alone. And while fast food tends to be an inexpensive option, this comes at the (literal) cost of healthcare: over $50 billion per year, according to a 2019 study in PLoS Medicine.

Synthetic plastics

Synthetic plastic (or just "plastic") first arrived in the early 20th century. Manufacturers were searching for a new raw material to replace the finite natural resources (e.g., ivory, horn, tortoiseshell) they had been using. The product of subjecting specific chemicals to intense pressure and heat, plastic quickly gained popularity as a durable, easy-to-produce, and cost-efficient option for mass-producing everything from appliance parts to decorative pieces. Decades of research led to new formulations of plastics, paving the way for new plastic products. It soon became clear that plastic was here to stay; unfortunately, this also turned out to be true in the literal sense.

Plastic products are among the things that take the longest to decompose. It takes an average of two decades for a plastic bag to break down; meanwhile, plastic toothbrushes, diapers, and coffee pods can last for up to 500 years. And as plastic waste accumulates in oceans, forests, and rural and urban areas, it disrupts entire ecosystems, harms wildlife, and damages human health. Even the creation and incineration of plastic are threats to planetary health, as these drive climate change. Worse, the problem doesn't end when plastic products break down.

Microplastics, tiny particles invisible to the naked eye, end up everywhere: in our water supply, inside our bodies, and even as far as Antarctica, a place with no permanent human populations.

Leaded gasoline

If you ever feel that, despite having the best of intentions, you seem to keep making things worse, just remember the story of Thomas Midgley, Jr., an inventor whom New Scientist gave the unfortunate moniker "one-man environmental disaster." Aside from his involvement in the invention of CFCs, the chemical engineer was also the brains behind leaded gasoline.

In the 1920s, Midgley, an employee of General Motors, pioneered the process of adding tetraethyl lead to gasoline as a means of improving vehicle performance and eliminating the knocking sound in engines with poor combustion. Despite concerns from scientists, medical professionals, and lead experts, Midgley insisted it was safe, claiming that "the average street will probably be so free from lead that it will be impossible to detect it or its absorption" (via BBC). Due to a lack of empirical evidence about its potential dangers — and in spite of lead's toxicity being well known at this point — leaded gasoline was rolled out and quickly embraced by the world.

But subsequent research proved his assertions wrong. Exhaust from cars running on leaded fuel spread airborne lead across entire cities, making people seriously ill. Children who were exposed to it were also observed to have developmental problems, including impaired cognitive function. Over the next few decades, countries began banning the use of leaded gasoline for road vehicles; the last to do so was Algeria, in 2021.

DDT

Although dichlorodiphenyltrichloroethane (DDT) was first synthesized in 1874, its usefulness as an insecticide only came to light in 1939 through the research of Paul Hermann Müller, a Swiss chemist. Introduced to the public as a powerful insect killer, it gained popularity among farmers and households alike; it saw widespread use during World War II, and remained the insecticide of choice for many Americans in subsequent decades. Because it drastically reduced the incidence of insect-borne diseases such as yellow fever, typhus, and malaria, it was marketed as a "benefactor of all humanity," even winning Müller the Nobel Prize in Physiology or Medicine in 1948.

But as it turned out, DDT wasn't just deadly to insects; it posed lethal dangers to other animals and humans, too. It was found to be highly toxic to marine life and birds, greatly contributing to the near-extinction of species such as the bald eagle and peregrine falcon. Moreover, it was found to have severe negative impacts on the development of unborn children, and could even increase the risk of certain cancers. And because it breaks down very slowly and dissolves in fat, it stays in the body, accumulating in fat reserves.

Armed with this knowledge, conservationists rallied against the continued use of DDT as an insecticide. Chief among them was American biologist Rachel Carson, whose 1962 book "Silent Spring" drew attention to the perils of using the synthetic chemical. A decade after the book's publication, the U.S. banned DDT for agricultural use.

Social media

It's hard to imagine a world without social media; humanity has come a long way from email and 1970s USENET chat groups. Now, social media platforms enable online users to build their professional portfolio, reconnect with high school friends they haven't seen in 20 years, or share videos of their 10-second brain farts. Social media played a particularly critical role in the COVID-19 pandemic, making it easier for people to maintain relationships despite social distancing, market their goods and services, and get important news about the outside world.

But as anyone who has ever had a social media account would tell you, things aren't always sunshine and rainbows in the world of likes, shares, and absurd dance challenges. These platforms are rife with spreaders of misinformation and with trolls (users who pick fights or write incendiary comments specifically to enrage other users and get reactions from them). A 2023 study published in Computers in Human Behavior acknowledged that misinformation is a problem "as old as human history," but noted the game-changing impact of social media on just how easy it is to create and spread falsehoods to as many people as possible.

Social media may also have a negative impact on the mental well-being of young adult users. Authors of a 2023 review in Cureus found a link between social media usage and depression in young adults, as well as anxiety and stress.

Cigarettes

The practice of recreational tobacco use has been around for thousands of years, predating the emergence of the modern-day cigarette. But with the invention of cigarette-rolling machines in the late 19th century, smoking experienced a boom in popularity. Aside from the increased efficiency of mass-producing cigarettes, the lack of rigorous scientific studies at the time about the health harms of smoking enabled manufacturers and marketers to promote smoking as a healthy, manly, and largely safe activity.

By the mid-20th century, however, the growing mountain of evidence about smoking's ill effects on human health was becoming harder to ignore. In 1964, the U.S. Surgeon General released a report firmly establishing smoking's role in significantly increasing the risk of lung cancer; it even noted that smokers had a 70% increased mortality rate compared to people who did not smoke. In recent times, much has been said about the connection between smoking and negative health effects on virtually every part of the body, based on decades of scientific studies. Yet experts estimate that each year, more than 480,000 people living in the United States die as a direct result of smoking or exposure to secondhand smoke, the latter a phenomenon experienced by nearly a quarter of the global population.

It's also worth noting that e-cigarettes, a fairly recent invention to help smokers quit, have been observed to cause health problems themselves, prompting the Centers for Disease Control and Prevention to state, quite plainly: "No tobacco products, including e-cigarettes, are safe."