These Software Glitches Destroyed Lives

"Technology gone wrong" is a popular trope in science fiction. In real life, when highly advanced software behaves in ways it was not intended to, it can sometimes lead to positive results for the flesh-and-blood humans who are there to witness it (like that time when a video poker software bug landed two men hundreds of thousands of dollars). More often than not, however, when we hear about software glitches, they typically have disastrous, or even fatal, consequences.

It's important to remember that not all software errors are the result of glitching. While glitches tend to manifest in unexpected situations and are almost always beyond the user's control, some software errors happen on purpose, with nefarious intent (like some of the biggest and most famous hacks in history). With that said, here are some of the most catastrophic software glitches of all time, from day-to-day automation systems that caused staggering financial losses to otherwise safe medical procedures becoming the very cause of patients' deaths.

Therac-25 radiation overdoses (1985-1987)

When it comes to dangerous software glitches in medical equipment, few are as infamous as the Therac-25. The radiation therapy machines, created by Atomic Energy of Canada Limited (AECL), experienced a series of malfunctions that led to at least six cases of radiation overdoses in different hospitals. In a nutshell, the machines' software allowed unsafe combinations of operating modes to be set during rapid keystrokes. On several occasions, the machine delivered extremely high doses in a fraction of a second, producing radiation burns and fatal injuries.

The first incident took place in June 1985 at the Kennestone Regional Oncology Center in Marietta, Georgia. The 61-year-old patient, a woman receiving post-op electron treatment, received burns so severe that her breasts had to be removed; her right arm was also rendered useless. Just a month later, a 40-year-old woman at the Ontario Cancer Foundation in Hamilton, Canada, was bombarded with up to 85 times the regular amount of radiation in a single treatment session. In December 1985, a woman at the Yakima Valley Memorial Hospital sustained radiation burns from the machine, though she eventually recovered. Sadly, the patients in the next three documented cases (one at the same Yakima hospital in 1987 and two at the East Texas Cancer Center, both in 1986) didn't survive their ordeals.

Interestingly, previous Therac models had hardware safety mechanisms in place as preventive measures — safeguards that were not replicated in the Therac-25 machines, whose safety features relied solely on their software.

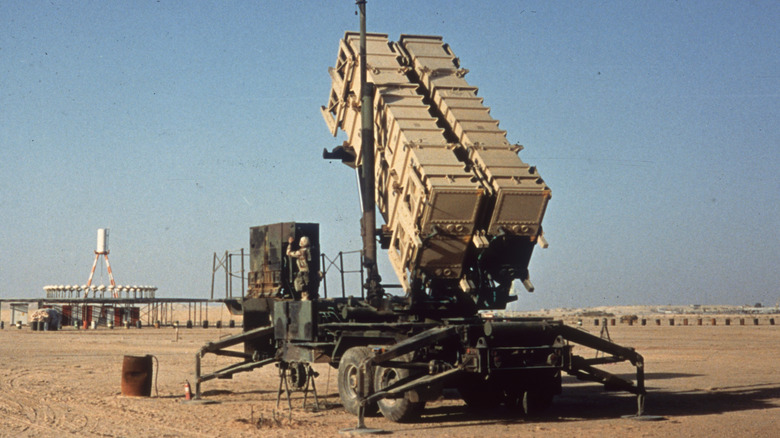

Patriot Missile System time-drift bug (1991)

One of the messed-up things that happened during the Gulf War took place on February 25, 1991. A Patriot missile defense system at Dhahran, Saudi Arabia, failed to intercept an incoming Iraqi Scud missile. The projectile struck a U.S. Army barracks, killing 28 soldiers.

The system had been operating continuously for more than 100 hours, exposing a software defect in the weapon control computer's time conversion. Because time was kept as an integer and converted to a real number with only 24-bit precision, the range gate calculation became less accurate the longer the system ran, affecting where and how the radar "looked" for the target. This conversion error caused the Patriot's system clock to drift by a fraction of a second over its non-stop, days-long runtime. By that fateful day, the accumulated error had become large enough that the system searched the wrong location and was unable to engage the incoming projectile. Per the investigators, periodic rebooting would have re-initialized the clock and could have mitigated the drift; however, the effects of the extended runtime were not adequately anticipated prior to the incident.
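
To get a feel for how such a tiny rounding error adds up, here is a minimal Python sketch of the drift described above. It rests on assumptions taken from commonly cited analyses of the incident rather than from the investigators' findings quoted here: that the clock counted tenths of a second, and that 0.1 was chopped to 23 binary places inside the 24-bit register. The names `truncate` and `clock_drift_seconds` are made up for illustration and are not the Patriot's actual code.

```python
from fractions import Fraction

TICK = Fraction(1, 10)   # assumed: the clock counts tenths of a second
FRACTION_BITS = 23       # assumed: binary places kept for 0.1 in the register


def truncate(value, bits):
    """Chop a fraction to `bits` binary places, the way a fixed-width register would."""
    scale = 2 ** bits
    return Fraction(int(value * scale), scale)


def clock_drift_seconds(hours_running):
    """Accumulated timing error after `hours_running` hours without a reboot."""
    error_per_tick = TICK - truncate(TICK, FRACTION_BITS)
    ticks = hours_running * 3600 * 10  # ten ticks per second
    return float(error_per_tick * ticks)


for hours in (8, 100):
    print(f"{hours:>3} h: clock off by about {clock_drift_seconds(hours):.4f} s")
```

Under these assumptions, the error per tick is less than a ten-millionth of a second, yet after 100 hours it grows to roughly a third of a second, which is a long time when the target is an incoming ballistic missile.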

The Army had actually begun distributing a software fix days earlier, after studying data from the field and realizing that after eight hours of continuous operation, the system's accuracy already began to falter. Unfortunately, the corrected software only reached Dhahran a day after the fatal strike.

Radiation planning software glitch in Panama (2000)

A software glitch sometimes manifests when the person operating the machine wants it to perform a function it wasn't actually designed for. Such was the case in the radiation therapy planning incident that took place in November 2000 at the National Cancer Institute in Panama City.

A computerized treatment planning system (TPS) created by Multidata Systems International was programmed to allow only four radiation blocks (metal shields meant to prevent radiation from affecting healthy tissue in patients) to be placed. Allegedly, a radiation oncologist wanted an extra block in place; the doctors found a workaround for this limitation by "drawing" the blocks as if they were a single block with a central opening, instead of separate blocks (which is the standard procedure). Because the program didn't display a warning about the improper data input, the staff likely assumed that this was okay. Sadly, they failed to take into account how the program would calculate the radiation dose following this input; even a minor change in the direction in which the hole was drawn could easily multiply the recommended radiation dose.

By the time the staff learned that the patients had been bombarded with too much radiation, it was too late. As of 2003, 21 patients had died; 17 of those deaths were linked to radiation overexposure. Eventually, criminal charges were brought against the machine operators involved.

London Ambulance Service dispatch software failure (1992)

When a flawed software design meets improper handling and execution, catastrophe may follow, especially when this happens within institutions dedicated to emergency response.

On October 26, 1992, the London Ambulance Service introduced a computer-aided dispatch (CAD) system intended to automate call-taking, patient location, and ambulance mobilization. Soon after launch, however, the system suffered extensive problems — queued calls were lost, status messages failed to update reliably, and dispatchers struggled to allocate vehicles — and crashed, prompting partial reversion to manual procedures after just one day. Unfortunately, due to the nature of the service and the scope of its service area, even this brief window of problematic computerization had serious consequences: Up to 46 people died what could have been preventable deaths, had ambulances reached them in a timely manner.

Following these deaths and the severe response delays across the capital, the service's chief executive, John Wilby, tendered his resignation. Days later, an external inquiry was launched, and control-room staffing support was immediately increased. The investigation found that because the LAS served millions of residents with hundreds of vehicles, the new system's attempt to computerize nearly every step at once (rather than incrementally supporting dispatchers) left it unable to handle the load reliably, which magnified the unexpected weak points in the software.

USS John S. McCain collided with an oil tanker (2017)

Before dawn on August 21, 2017, the destroyer USS John S. McCain collided with the oil tanker Alnic MC off the coast of Singapore, in one of the world's busiest sea lanes near the Straits of Malacca. The Navy ship sustained a large hole in its port (left) side hull. Ten U.S. Navy sailors, initially reported as missing, died in the collision.

Subsequent investigations by the Navy and the National Transportation Safety Board (NTSB) found that a series of configuration and control errors on the ship's integrated bridge and navigation system preceded the loss of steering minutes before impact. One year before the incident, the USS John S. McCain had received a touch-screen steering and thrust control retrofit. The interface centralized many functions and allowed complex mode changes; this upgrade, intended to make things easier, ended up becoming a source of confusion for stressed operators (who also received insufficient training on using the new system). Moments before the crash, steering and throttle control were split between stations without clear crew understanding, and the ship began an uncommanded turn to port that the bridge team could not quickly override or counter.

As a result of these findings, some senior officers were charged with negligent homicide, and some commanders were dismissed from the Navy. In addition to crew lapses and training shortfalls, the NTSB also concluded that the touch-screen system's design increased the likelihood of operator error. Two years later, the Navy moved to re-introduce physical throttles and simplify controls across the class.

U.K. Post Office computer system glitch (2000s)

Fujitsu's Horizon system, introduced in U.K. Post Office branches in 1999 to computerize its accounting, generated unexplained shortfalls that the Post Office routinely treated as evidence of theft or fraud by subpostmasters. From 1999 to 2015, nearly a thousand cases of suspected theft and false accounting were brought to court; as a result, many subpostmasters were convicted and received jail time.

Beyond the legal repercussions, these cases also had severe negative impacts on the personal lives of the accused. Some found themselves in financial ruin after having to use their own funds to make up for the missing money; some suffered the breakdown of their marriages, while others spiraled into substance misuse. Worse, at least 13 people are believed to have taken their own lives in relation to the scandal.

Over 500 subpostmasters came together in 2017 to file a counter-case against the Post Office. In 2019, the courts found that the system's errors could indeed cause the very discrepancies that were blamed on the subpostmasters. As of June 2025, the Post Office has compensated more than 7,300 subpostmasters.

Interestingly, some of the bugs were detected years earlier. In 2006, a subpostmaster detected a glitch in which non-physical stamp sales would be accidentally doubled, creating an accounting discrepancy. He brought this to the attention of the Post Office and Fujitsu, but was told that no action would be taken to correct the error, and that the subpostmaster network would not be informed about it.

If you or someone you know is struggling or in crisis, help is available. Call or text 988 or chat 988lifeline.org

A U.S. Navy cruiser shot down a passenger plane (1988)

The USS Vincennes was one of the guided missile cruisers deployed by the United States during the Iran–Iraq War to protect oil tankers and other ships from becoming accidental casualties in the crossfire between the two warring countries. Unfortunately, due in part to a glitch made possible by less-than-optimal software design, the warship itself ended up causing the deaths of 290 civilians, in what might have been an avoidable tragedy.

On July 3, 1988, the U.S. Navy cruiser fired two surface-to-air missiles that destroyed Iran Air Flight 655, an Airbus A300, mere minutes after it lifted off from Iran's Bandar Abbas International Airport. Among the Dubai-bound plane's passengers and crew, none survived. Findings from the subsequent investigation of the incident revealed that at the time, the Vincennes was engaged in combat with Iranian vessels in the Strait of Hormuz. Under the command of Captain William C. Rogers III, a seasoned military man with a reputation for aggressive tactics, the Vincennes misidentified the ascending civilian airliner as a descending F-14.

While the tragedy could be attributed to a variety of reasons (including the crew's lack of familiarity with the ship's Aegis fire control system), there were issues concerning the ship's combat information center displays. Crucially, the Identification, Friend or Foe (IFF) reading reported Flight 655 as Mode II (military) instead of Mode III (civilian), a result of the Vincennes' radar picking up a signal from the wrong tracking gate and thus giving an incorrect assessment.

Pandemonium at the Heathrow Terminal 5 opening (2008)

Flight cancellations and lost baggage are among the many horror stories that can happen to anyone at an airport. But when hundreds or even thousands of these cases happen simultaneously, what would typically be an internet rant or an irritated customer service story becomes a full-blown catastrophe.

In March 2008, Heathrow Airport, the U.K.'s largest airport, proudly unveiled its then-new Terminal 5, which operated with a supposedly cutting-edge baggage handling system. Unfortunately, it quickly became clear that the system simply couldn't keep up with actual, real-time passenger loads. From its opening day, baggage belts and sorting logic suffered repeated breakdowns, staff struggled with unfamiliar procedures, passengers found themselves stuck in line at the departure halls, and planes left without correctly loaded luggage. Nearly 250 flights were canceled just half a week into Terminal 5's inaugural operations, and by the end of the month, nearly 30,000 bags had to be placed in temporary storage and returned to their angry owners. Worse, the process of returning all the bags had to be done manually, since the airport staff couldn't even use the system for reprocessing and rescreening the luggage.

The entire situation understandably became a colossal embarrassment for airport staff and government officials alike. It reached the point where, in order to regain the confidence of the public five months after Terminal 5 opened, British Airways found itself running advertisements featuring the tagline "Terminal 5 is working."

The fall of Mt. Gox (2011 and 2014)

Among cryptocurrency enthusiasts, Mt. Gox is perhaps best known for two things: its reputation for being, at one point, the largest Bitcoin exchange in the world, handling over 70% of global Bitcoin transactions – and its catastrophic downfall, brought about by not one, but two software-related disasters.

First, in October 2011, a programming mistake in transaction-handling logic caused the exchange to create outputs that were unspendable. At least 2,609 bitcoins were effectively stranded due to a subtle error in how change was constructed; in other words, because the code produced invalid conditions, the coins could never be redeemed. This caused an estimated loss of $8,000 at the time (more than $315 million at today's Bitcoin prices).

Fast forward to February 2014: Mt. Gox announced that it had lost roughly 850,000 bitcoins due to a combination of hacking and internal weaknesses. About 750,000 of these lost coins belonged to customers, and 100,000 belonged to the exchange. At the time, the total value of the lost coins was pegged at around $480 million. The company's CEO, Mark Karpeles, publicly apologized, as the company filed for bankruptcy protection in Japan, where it was founded just four years earlier. By the end of the month, Mt. Gox's operations ceased. The next month, the company announced that it found about 200,000 bitcoins in an old Bitcoin wallet. However, the sheer scale of the shortfall translated into years of restitution efforts; as of August 2025, many of the creditors are still awaiting their payouts.

Ethiopian Airlines Flight 302 crash (2019)

On March 10, 2019, Ethiopian Airlines Flight 302, a Boeing 737 MAX 8 bound for Nairobi, crashed shortly after takeoff from Addis Ababa-Bole Airport in Ethiopia. None of its 149 passengers and eight crew members survived.

Online air traffic reports stated that almost as soon as it began its flight, the plane exhibited an unstable vertical speed. The findings of the accident investigation report supported this, stating that the plane went into a nosedive, descending at 33,000 ft/min, due to repeated erroneous airplane-nose-down commands executed by the plane's Maneuvering Characteristics Augmentation System (MCAS) and an unprompted deviation between the left and right Angle of Attack (AOA) values seconds after takeoff.

Tragic enough on its own, the incident was made worse by the fact that it happened just five months after another 737 MAX 8 plane, Lion Air Flight 610, crashed in Indonesia and killed its 189 passengers and crew. This prompted a worldwide grounding of the plane model.

Families of the victims in the Flight 302 crash pursued compensation from Boeing under U.S. law. In 2021, two years after the disaster, the manufacturer accepted full responsibility for it, acknowledging that the particular model had an "unsafe condition" and agreeing not to attribute blame to the airline or pilots. It's worth noting that at this point, the 737 MAX's design had already been updated, and the model had been deemed fit to return to active service.

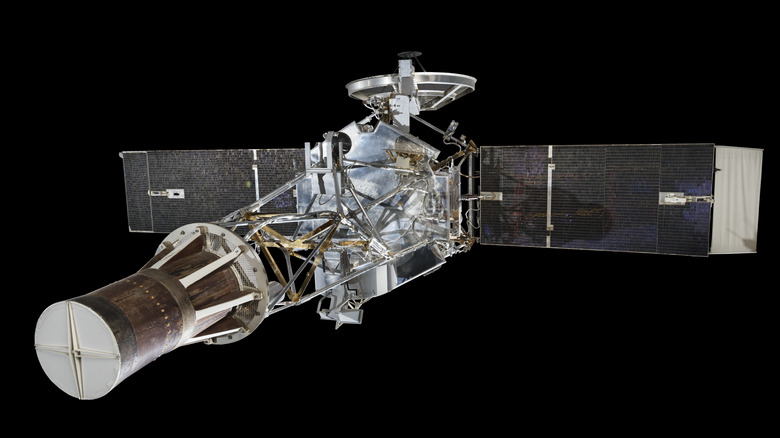

Mariner 1 spacecraft veered off course (1962)

Ask someone to name some expensive space gadgets that failed miserably, and there's a decent chance the Mariner 1 space probe will make their list. Aside from its significance in U.S. space history, it's also frequently cited as an example of how even the smallest errors or inconsistencies can throw a wrench into the most well-laid high-tech plans.

Intended to be the first American attempt at a Venus flyby mission, the space probe was launched from Cape Canaveral on July 22, 1962. But moments after it took off, experts noticed that it had already veered off course. Realizing that the spacecraft could come hurtling back down to Earth and potentially cause loss of life somewhere near the North Atlantic, an engineer used its remote detonation feature, obliterating it about 294 seconds post-launch.

The cause of this incredibly costly (to the tune of at least $18.5 million, or over $194 million today) mistake? Aside from a malfunction attributed to the guidance antenna on the launch vehicle, an equation in the software had a missing overbar (R̅) over the radius symbol (R). This simple typographical error meant that the spacecraft's software did not account for variations in velocity correctly, leading to wildly incorrect on-the-fly course corrections and an erratic flight path.
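
Why would one missing bar matter so much? The Python sketch below is a toy illustration, not a reconstruction of Mariner 1's guidance equations. It assumes that the overbar marked a smoothed (time-averaged) value, and every number and function name in it is hypothetical. It compares the rate of change computed from raw, jittery readings with the rate computed from smoothed readings.

```python
def moving_average(values, window):
    """Average each reading with the (window - 1) readings before it."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def rates(values, dt):
    """Rate of change between consecutive samples."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def spread(values):
    """Gap between the largest and smallest value."""
    return max(values) - min(values)


# Hypothetical tracking data: a steady climb of 10 units per step,
# plus a small alternating measurement error of +/- 2 units.
true_radius = [100 + 10 * k for k in range(12)]
noise = [2 if k % 2 == 0 else -2 for k in range(12)]
measured = [r + n for r, n in zip(true_radius, noise)]

raw_rates = rates(measured, dt=1.0)                                 # no bar
smoothed_rates = rates(moving_average(measured, window=4), dt=1.0)  # with bar

print("raw rate swings by:     ", spread(raw_rates))       # 8.0
print("smoothed rate swings by:", spread(smoothed_rates))  # 0.0
```

In this toy data the true rate never changes, yet the raw estimate bounces between 6 and 14 while the smoothed one stays at 10. A guidance program fed the raw figure would keep "correcting" for speed changes that never happened.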

An interesting footnote: News outlets at the time mistakenly reported this as a missing hyphen (perhaps because more people are familiar with hyphens than overbars), creating a longstanding misconception about the curious cause of Mariner 1's failure.