Famous Scientific Theories That Were Proven Wrong

Science is a beautiful thing. It's a constant search for answers, for ways to explain what's going on in this wild world, for a better understanding of just about anything. Keeping up with current scientific research can be painfully mind-boggling, but it's also incredible that people are out there coming up with such ingenious explanations for why things happen the way they do.

But science isn't about getting the answers right on the first try. It's more about trial and error and asking new questions, which means humanity has seen its fair share of debunked theories and hypotheses over the years. And there are a lot more than just the infamous idea that the Earth is flat. That one is pretty well known, but every field of science has its own set of abandoned theories (and, for that matter, current theories that may someday join their ranks; it may just be a matter of time). A lot of them are pretty wild, and some are really complicated, but all of them played some part in building scientific knowledge as it stands today.

Really, there are tons of these, but here's a little list of a few that are particularly interesting.

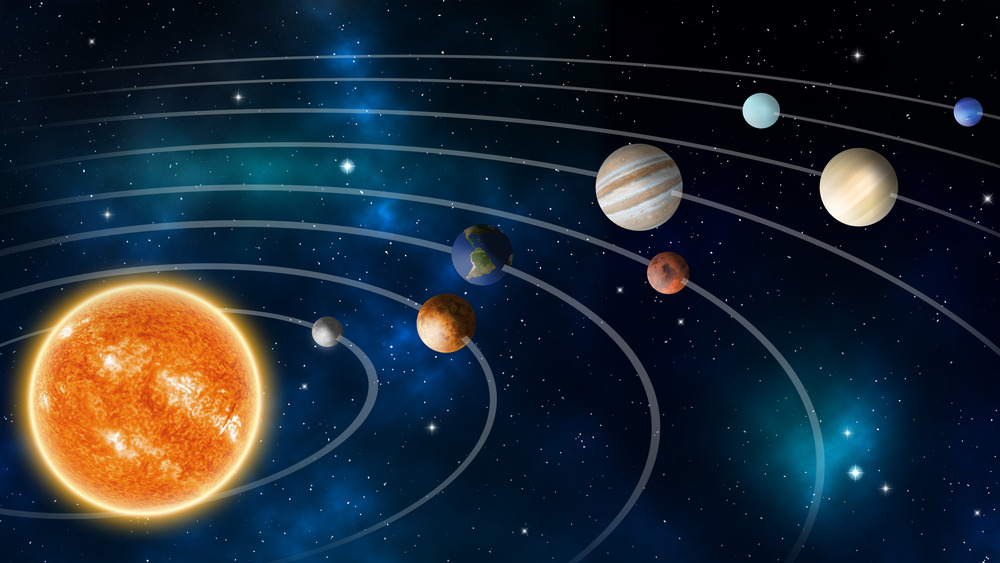

The Earth-centered universe

Back in antiquity, people understood the universe quite differently than we do now, but they still had theories to explain it. One of the best known was the geocentric model. There were a few variants of it, though Britannica says the most detailed one comes from Ptolemy. In general, geocentric models are exactly what the name implies: they put the Earth at the center of everything, with the Sun, Moon, planets, and stars all circling it.

But the theory had problems, things it couldn't really account for. As EarthSky explains, the planets usually drift steadily in one direction against the background stars, but sometimes they'd reverse course and go backward for a while. Mars does this every so often, a phenomenon called retrograde motion, and it didn't fit neatly into a simple Earth-centered picture. All the same, defenders of the geocentric model tried to make it work by making the model more complex: each planet moved in a small circle (an epicycle) around a point that itself circled the Earth. Basically, more circles fixed the problem.

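But there was a simpler solution: a Sun-centered solar system (via Britannica). It fixed problems like retrograde motion without any extra circles. The full geometry is a bit involved, but the short version is that Earth travels faster on its inner orbit, so when it overtakes Mars, Mars appears to slip backward against the stars for a while, much like a slower car seems to drift backward as you pass it on the highway. The idea had actually been around since at least the 3rd century BC, when Aristarchus of Samos proposed it, though it wouldn't really catch on until Nicolaus Copernicus revived it in the 16th century.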

The four humours

It might be fair to say that early medicine was rather humorous. Not humorous as in "funny," but humorous as in: medicine revolved around the "four humours." The U.S. National Library of Medicine lists those four humours as black bile, phlegm, yellow bile, and blood. Basically, they were four fluids that ancient Greek physicians believed every human had inside them, each with its own properties. Black bile and phlegm were cold, while blood and yellow bile were hot; black and yellow bile were dry, while phlegm and blood were moist.

The four humours were held responsible for all manner of traits in people, from age to temperament. The system was fairly dynamic: the humours interacted with each other, their relative levels accounted for a person's mood, and those levels themselves shifted with factors like the season. That dynamic nature is why the four humours were used as a diagnostic tool. According to The Legacy of Humoral Medicine, an imbalance in the humours brought about various diseases. For example, winter increased phlegm, and that cold, moist fluid led to diseases like pneumonia. A doctor's job, then, was to restore the natural balance of a person's humours, whether through diet, exercise, or directly removing fluid from the body (bloodletting being the most famous example).

Obviously, this isn't how medicine is practiced today. Not even close. But humoral theory did grow out of the idea that disease could be explained by natural causes rather than supernatural ones, which was an important step forward.

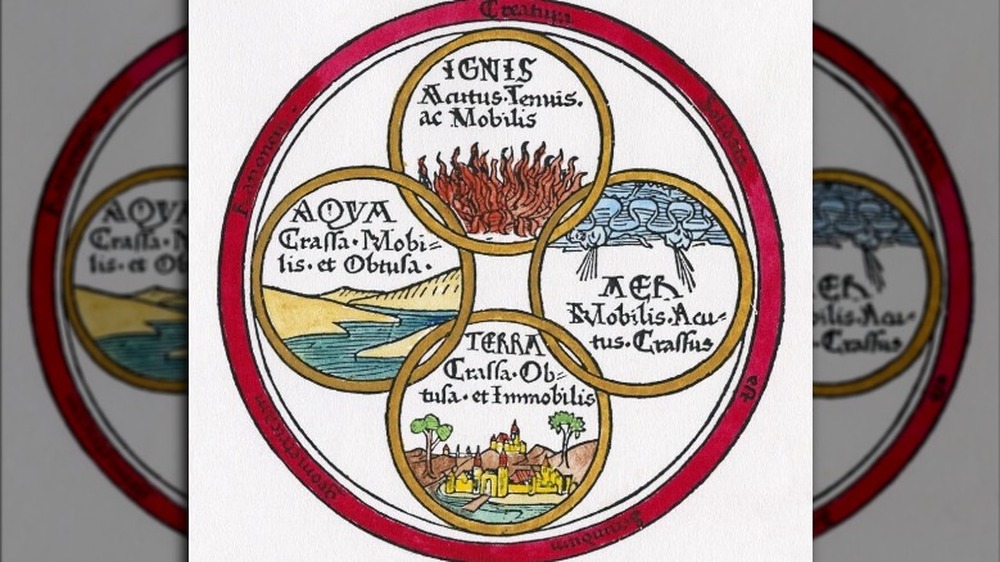

The four elements

Earth, air, fire, and water. Mentioning those four elements probably brings to mind some sort of element-based magic system (or the opening to Avatar: The Last Airbender), but think about that wording. We call them the four elements, even though the word "elements" is also heavily tied to, say, the periodic table of the elements. That's no coincidence, because the ancient Greeks thought of fire as an element in the same way we think of hydrogen as one.

In all fairness, the idea of four elements didn't originate solely in ancient Greece; versions of it show up in other civilizations, too. But to keep things simple, the Greeks took those four elements and gave each one properties: fire was hot and dry, water was cold and wet, and so on. (They also included a fifth element, the aether, which had to do with heavenly bodies like the stars, but let's stay earthbound for now.) Since each element had its own properties, the elements could be combined to explain the qualities of other objects. According to World History Encyclopedia, fire and earth made things dry, while air and fire made them hot. From there, the idea followed that the four elements made up everything.

And while the general idea (that everything is built from a small set of basic ingredients) holds some weight, the details have definitely been revised over time. Today, an intro chemistry class is enough to teach you that everything is made of atoms of the elements on the periodic table (via Compound Chem).

Phlogiston: the 'burning element'

Combustion is kind of a weird little process. How exactly does it work? There's fire, and it's hot, but what's actually going on?

Combustion, or burning, is just a chemical reaction, and Chemistry for Liberal Studies – Forensic Academy explains it pretty well. All it needs is fuel (the thing that burns, which for common fuels is mostly carbon and hydrogen), oxygen, and some kind of spark. The fuel and oxygen are the starting materials, but just leaving those two together won't do anything: gasoline spilled on the floor doesn't immediately ignite. Some energy is needed to kick-start the reaction, which can come from heat or an electric spark, among other things. Once the reaction gets going, it produces carbon dioxide and water vapor, and forming those products also releases excess energy as heat and light, better known as fire.

But those details weren't available to early chemists, so they settled on something called "phlogiston." Britannica describes it as the combustible part of a material: whenever something burned (or metal rusted), phlogiston was supposedly released, leaving behind ash. The idea held up for a while, but it had a problem. Rusted metal weighed more than the original metal, which made no sense if something had been lost. By the late 1700s, Antoine Lavoisier had shown that oxygen, not phlogiston, was involved in combustion and rusting, and the phlogiston concept didn't stick around much longer after that.

The miasma theory of disease

The spread of disease baffled humanity for a long time, and until the mid- to late 1800s, most people believed in the miasma theory. Miasmas were poisonous gases and "corrupted" air, which people blamed for disease at least as far back as classical Greece (via Encyclopedia). Those gases supposedly came from things like rotting vegetation or decaying carcasses, and inhaling them could make a person sick, as History of the Miasma Theory of Disease explains. And how could you tell the air was poisonous? Well, it smelled foul (more or less how you'd imagine a rotting corpse would smell).

This belief lasted a really long time, and a decent amount of medical science was built around it; malaria, one of the diseases the miasma theory tried to account for, literally translates from Italian as "bad air." The theory also shaped the response to the cholera epidemics that hit Victorian London in the mid-1800s (via Science Museum). With people crowded into slums and surrounded by waste amid rapid industrialization, officials who believed in the miasma theory set about improving sanitation and removing that waste, and with it the foul smells.

Obviously, better sanitation helped (and is just, you know, generally a pretty good thing), but it didn't solve the problem. The disease also seemed to spread through water, and pass from infected people to their healthy companions. That's where contagion and germ theory ended up shining, and the rest is history.

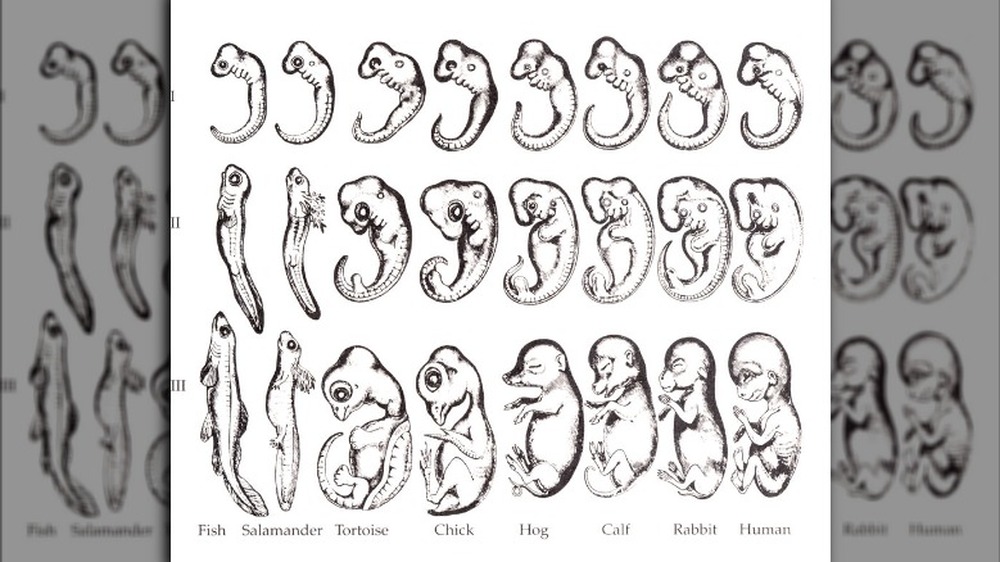

The 'fish stage' of human development

Alright, this one definitely sounds a little weird, so here's a more official-sounding name for what's going on: the Meckel-Serres Law (via The Embryo Project Encyclopedia). And that law has quite the backstory. Its roots go back to Aristotle and his idea of the scala naturae, a hierarchy of living beings ranked by complexity. Humans sit at the top, and animals fall into tiers underneath. Johann Meckel and Étienne Serres took that idea and ran with it. They studied embryos, looking for proof that more complex beings (like humans) passed through stages that resemble simpler beings (like fish and reptiles).

And they did seem to find their evidence. The organs of younger embryos of "higher order" beings more closely resembled those of "lower order" ones, and the human brain seemed to follow suit, resembling the brains of fish and then reptiles before becoming more like a fully developed human brain. They used that evidence to explain birth defects as cases where development stopped at one of those lower stages, and the discovery of gill-like slits in the necks of young human embryos seemed to cement their theory (hence the "fish stage").

But other biologists disagreed with this strict hierarchy, and, well, observational evidence isn't surefire proof. Darwin's theory of evolution by common descent held that humans and fish share a common ancestor (which, as Durham University notes, is also why they share some DNA), and that shared ancestry could explain the similarities between embryos just as well.

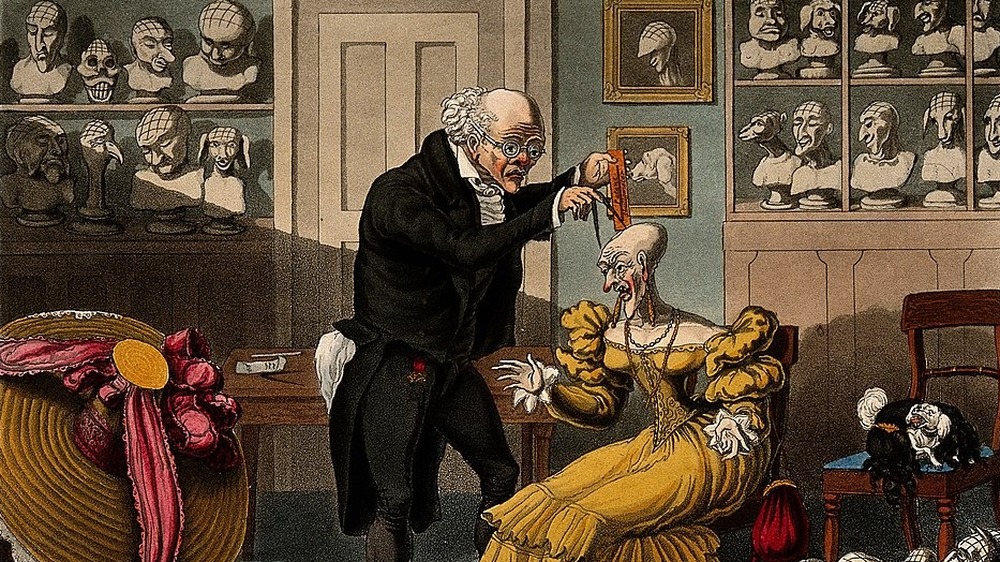

The study of phrenology

In general, phrenology is a pseudoscience that links personality traits to different brain "organs," according to ThoughtCo. Sure, that doesn't sound all that far from modern-day neuroscience, but the traits assigned to each organ were pretty different: they included "language" and "memory," but also "sense of religion" and "metaphysical lucidity." On top of that, a big part of phrenology involved doing "readings." A practitioner would feel the bumps on a person's skull, and the location of those bumps supposedly showed which organs were stronger, and thus more prominent in someone's personality.

Yeah, that doesn't sound so much like modern neuroscience, and phrenology was already heavily criticized at the time, but it became a popular social fad. People used these readings for career and romantic advice, and women changed their hairstyles to show off flattering phrenological features. (On the flip side, it was also used to justify harmful racial stereotypes and assumptions.)

That said, Smithsonian Magazine explains that modern neuroscience and psychology can tip their hats to phrenology. Yes, it's a pseudoscience based on very little fact, but it was onto something. It asserted not only that the brain played a part in behavior, but also that mental functions were localized: language handled in one area, decision-making in another. The brain really is divided into distinct areas and systems, so in a general sense, the phrenologists kind of had the right idea.

The many atomic models

When it comes to the atomic model, there isn't just one debunked theory; it's more like a long history of revised theories, and even the current model is still being refined.

The story starts in ancient Greece, where some early thinkers, such as Democritus, believed matter was made up of indivisible particles. The Greek "atomos" actually means "indivisible," and more than two thousand years later, John Dalton drew on that idea, theorizing that atoms were solid, unbreakable spheres (via Compound Chem). The thing is, that's not true. Atoms are made of smaller particles. J.J. Thomson discovered the negatively charged electron, which he pictured scattered through a positively charged cloud (he needed that positive charge to balance out the electrons' negative charge). Then Ernest Rutherford showed that the positive charge was actually packed into a tiny nucleus at the very center of the atom, and that charge later got its own particle, the proton. This is where the familiar picture of the atom comes from, with electrons orbiting the nucleus the way planets orbit the Sun.

But the atomic model as it's understood now is... pretty weird. Basically, electrons don't travel in neat orbits; they behave more like waves, spread out in "clouds" (orbitals) around the nucleus. Those clouds can be shaped the way you'd expect (like spheres) or in ways you wouldn't (like dumbbells and four-leaf clovers), and they represent the regions where you're likely to find an electron, because its exact position can't be pinned down (via LibreTexts). Yeah, it's complicated.

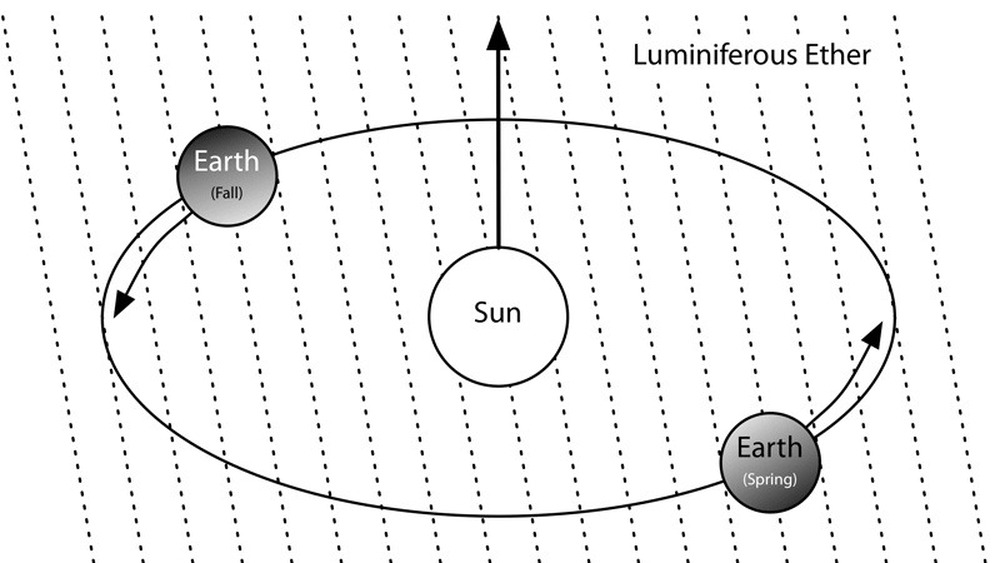

Luminiferous aether

Light seems like it'd be pretty easy to explain. After all, we're surrounded by it all the time, and it's essential to life itself, but when scientists study it, things get confusing. One question surrounding light was: how does it move through space? According to Lockhaven, this question came from the knowledge that sound waves needed a medium, such as air, to travel through. Sound waves couldn't pass through a vacuum, but light waves could. So if sound waves needed a medium, didn't light waves need one as well?

Luminiferous aether became the answer. It was the medium light supposedly traveled through: an invisible substance all around us that couldn't be felt the way air could, but was simply there. It was the stuff that waved when light moved, the same way air waves when sound moves. With that in mind, scientists set out to measure the "aether wind." Michael Fowler from the University of Virginia explains that if the aether existed, the Earth would be moving through it as it orbits the Sun. So the aether should be rushing past the Earth (like water past your hand as you drag it through a pool), and light should travel at slightly different speeds depending on whether it was moving "upstream" or "downstream" in that flow.

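But when Albert Michelson and Edward Morley ran the most famous version of that experiment in 1887, they found no aether wind at all: light traveled at the same speed in every direction. Other experiments also seemed to contradict the aether theory, and, long story short, Einstein's special theory of relativity ended up providing an explanation that didn't require an aether, so the idea has been largely abandoned.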

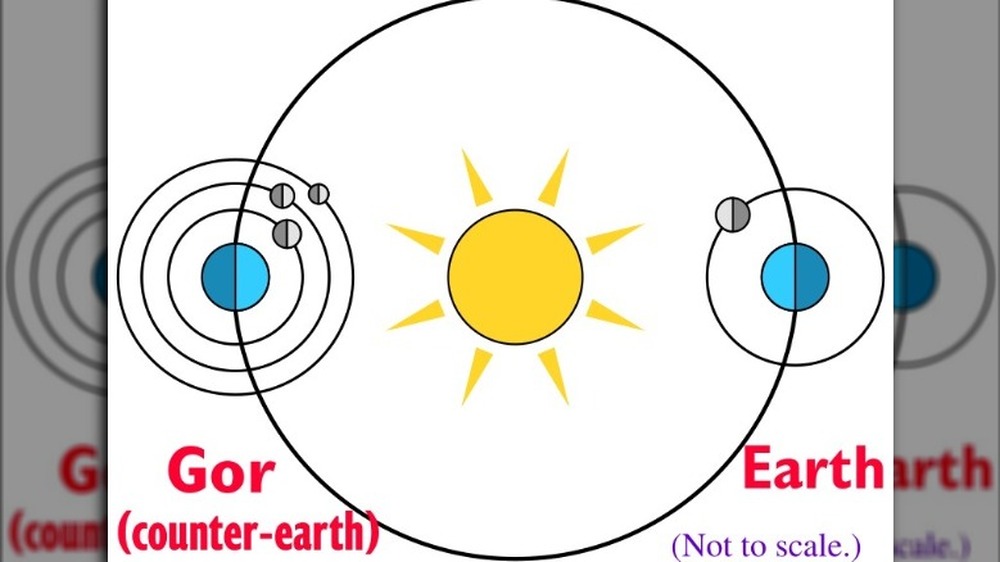

Predicted planets

Honestly, there are quite a few predicted planets that never turned up, so let's just talk about a couple.

One comes from ancient Greece: the counter-Earth. The Stanford Encyclopedia of Philosophy explains that the counter-Earth was part of the Pythagorean astronomical system, in which all the planets orbited a Central Fire (not to be confused with the Sun, which also orbited the Central Fire). The counter-Earth's orbit was smaller than Earth's, and our side of the Earth always faced away from it, which is why we could never see it. As for why it was proposed, that's hard to say. Aristotle thought it was there to bring the number of celestial bodies up to ten (the Pythagoreans were fond of the number ten), but others suggest it was meant to explain lunar eclipses.

Another proposed planet was Vulcan, and no, not the Star Trek Vulcan. This Vulcan was a planet thought to exist inside Mercury's orbit, super close to the Sun. According to Business Insider, Mercury's orbit around the Sun "wobbles," shifting slightly with each trip around, and Newtonian gravity suggested that a small planet closer to the Sun than Mercury could cause that effect. But no one could reliably spot this planet, and it was Einstein's general relativity to the rescue. Without getting into the nitty-gritty, the Sun warps the space around it; think of what happens when you put a bowling ball on a trampoline. Same concept. So Mercury's orbit acts weird because the space it moves through is warped, not because of another planet.

A static, steady universe

The universe, how it works, and what's going to happen to it — all of that is still a big question mark, but there are some things that scientists generally agree probably aren't true.

Einstein himself fathered one of those theories: he believed the universe was static (via Phys.org). He thought the universe as a whole had a fixed size, neither expanding nor contracting. When his own theory of general relativity implied that gravity should pull everything together, he introduced an entirely new term, the cosmological constant, just to push back against gravity and balance it out perfectly. But that didn't hold up. Einstein's own equations also worked for an expanding universe, and Edwin Hubble found that galaxies are moving away from each other. Eventually, Einstein came to accept the expanding universe.

Then there's the Steady State Hypothesis, which Universe Today describes as the belief that the density of matter in the universe never changes. In this view, new matter is constantly being created as the universe expands, keeping things looking the same, and the universe itself has no beginning or end. But the Steady State Hypothesis made predictions that didn't match observations, and its main competitor, the Big Bang Theory, started to take off. The biggest blow came when scientists found the Cosmic Microwave Background, leftover radiation that the Big Bang Theory had predicted. Now, most scientists see the Big Bang as the best explanation for the cosmos.