How AI Could Destroy The World

Generative artificial intelligence was meant to be the next world-changing technology to come out of Silicon Valley. Companies like OpenAI grew faster than any other startup in history, and CEOs like Sam Altman became household names. Reading stories in the tech press, you would be forgiven for thinking there was nothing that AI couldn't already do, or at least wouldn't be able to do very, very soon.

Some scientists have predicted that artificial intelligence might end the world, but as of 2025, humanity doesn't seem to have much to worry about on that front: there is no evidence that the current generation of AI could ever become truly self-aware. Yet AI can ruin everything in more ways than the apocalyptic scenarios of machines enslaving everyone or harvesting all of Earth's resources to fulfill their programmed objectives. Those scenarios make for good headlines and drive clicks, but AI could cause, and already is causing, major problems even in its current state.

Not everything about generative AI is bad. There are, for example, some arguably creepy (but good) ways AI is changing the future of medicine. But the everyday use of the technology has so many downsides that they should make even the biggest AI optimists rethink whether it is worth the costs. Here's how the AI technology that already exists could destroy the world.

The end of accountability

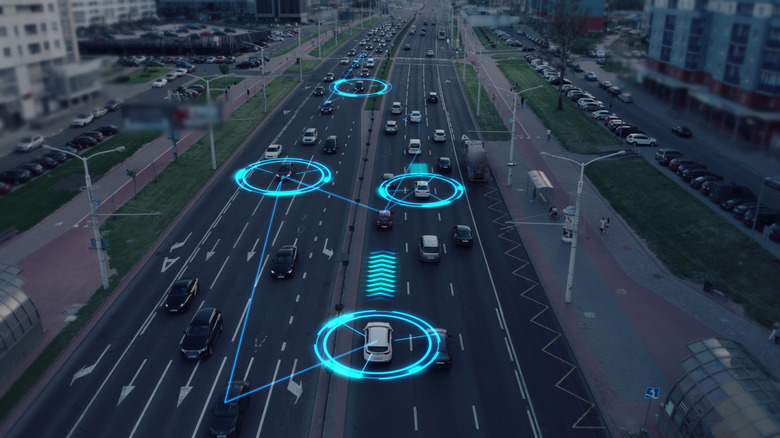

One of the difficult things about being human and existing as part of a society is that you have a responsibility to those around you. Even the most selfish among us can't get away with hurting others without consequences. For example, let's say a guy is driving on the highway. He's rushing to his favorite sporting goods store, which is having an unbelievable sale on fishing poles. But the other drivers on the highway do not understand just how good this sale is, and they are driving the speed limit, much to his chagrin. So he decides to floor it, weaving dangerously in and out of traffic, until the inevitable happens: he causes a crash. If someone in the other car is injured or killed, that impatient fishing enthusiast is on the hook for his decisions and can face both criminal charges and civil lawsuits.

But what if a person wasn't making those decisions? What if the crash was caused by the car itself, or rather, by choices made by its AI-controlled autonomous driving software? Who is held responsible for the tragedy? So far, the answer seems to be a very disturbing "no one."

It gets worse. AI has been used to inform sentencing decisions for convicted criminals, allowing judges to blame the program for harsh or lenient sentences. Or imagine if the Nazi defendants at Nuremberg, instead of claiming they were just following orders, could have said those orders came from an AI. With the increasing use of AI in warfare, there is a serious concern that no one will be held responsible for war crimes, leaving only computer programs to blame.

Destroying the economy in multiple ways

One of the most headline-grabbing concerns about generative AI is that it will replace the jobs of millions of ordinary people. While the massive job losses some predict are unlikely (although the jobs that are lost will most severely affect women and people of color), a more realistic danger is that AI could send the world into a recession, or worse, if it fails as an industry. The economy is being propped up by a financial house of cards.

In his newsletter, AI critic Ed Zitron has covered the math behind this concern extensively, but in general terms it comes down to this: some of the biggest companies in the world, such as Amazon, Google, and Meta, have thrown billions of dollars at generative AI technology. Software may seem like an ethereal concept (think "the cloud"), but one part of the equation requires very specific, very expensive hardware. The data centers these companies build to get enough computing power to run AI software are filled with GPUs, specialized computer chips that are almost all sold by one company, NVIDIA.

Zitron argues that if the vibes-based AI economy falters, the hit to the stock of the seven biggest tech companies, which currently make up 35% of the value of the U.S. stock market, would drag the rest of the economy down with it. Even if only those companies were affected, the fallout could decimate the retirement accounts of people who didn't even realize they technically held stock whose value depended on the success of AI technology.

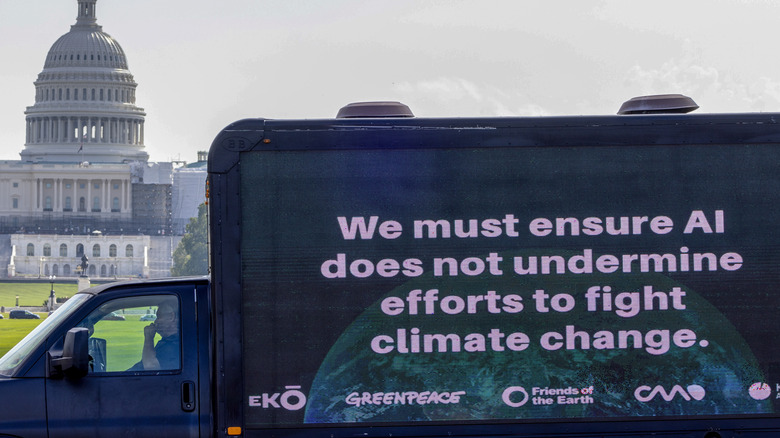

Devastating environmental impacts

Perhaps the biggest immediate concern about generative AI in its current form is how apocalyptically disastrous it is for the environment. Humanity was never doing a particularly good job of addressing the climate crisis, and now even the small gains it had made are being rolled back. According to Climate Action Against Disinformation, several major tech companies, including Microsoft and Google, that previously bragged about their environmental policies and their goals to lower corporate emissions have effectively said "screw it": their more recent actions show that they prioritize the jangling keys of generative AI over environmental responsibility.

The amount of water and electricity needed to run the huge, GPU-filled data centers that power large language models (LLMs) isn't sustainable at the current rate of growth. Data centers are already causing water shortages in some areas, and, like so many of life's injustices, the burden falls hardest on poorer and minority communities. Their water demands are only expected to grow in the coming years. On top of everything else, data centers create noise pollution, which is a problem for people who live just a few hundred yards away from them.

One positive sign is that local communities are fighting back against resource-hungry data centers being built in their backyards. Residents of towns across the United States are connecting with one another and learning what has worked elsewhere to stop data centers from being built. This has led to many raucous town meetings, where representatives of the companies building data centers are confronted about the damage they are doing to communities and the planet.

Destroying the concept of truth

From deepfakes to its constant hallucinations, generative AI is very good at convincing humans that something false is true. The resulting problems range from Boomers on Facebook falling for fake images of celebrities to what many experts predict could be the end of democracy itself.

Deepfake videos and audio recordings that purport to show politicians saying something terrible, and programs that can take any woman's face and create a nude photo, are already serious problems, and people fall for them constantly. There are some obvious signs of AI generation: the hands of people in AI images are notoriously awful, often having more than five fingers. But as the fakes get better and those issues are ironed out, more people will be fooled. This is especially true when the fake shows something they want to believe, as many TikTok users discovered to their shock when an adorable video of bunnies jumping on a trampoline went viral. It was AI, and millions of young, tech-savvy people fell for it.

Even if you think you are "too smart" to fall for an AI-generated image, video, or news article, your sense of reality has already been affected by their very existence. Any piece of media could be fake, so you now have to decide whether each one is. Things that are actually real will be dismissed as AI creations, and others will disagree with that assessment. Our shared reality will fracture as we are forced to question everything.

Bringing on or exacerbating mental health issues

Chatbots are a popular use of generative AI technology. While all of them are trained on vast amounts of data to come across as human as possible, the "personalities" that different companies build into their models vary. In general, however, chatbots encourage and support users no matter what they say, because companies know that kind of response keeps people using the product. This appears to be proving disastrous.

As of 2025, generative AI tools have been in widespread use for too short a time for many rigorous, peer-reviewed studies on the topic to exist, but a wealth of anecdotal evidence suggests that some people who use AI chatbots like ChatGPT are becoming detached from reality as a result, a phenomenon that has been dubbed "AI psychosis." Most people can have a conversation with a chatbot and be just fine, but with hundreds of millions of people using these programs each month, even the small percentage whose mental instability is exacerbated or uncovered by those conversations adds up to a lot of people, and it has already led to tragedies.

These incidents range from deaths to marriage breakups. Chatbots have given users information on how to harm themselves or complete suicide. They often encourage delusional thinking, as with the many users who have been told by chatbots that they are a prophet or even a god. The disastrously supportive nature of chatbots has also led some places to ban AI "psychologists." AI companies claim they have guardrails to prevent these situations, but those guardrails have proven inadequate.

The surveillance state

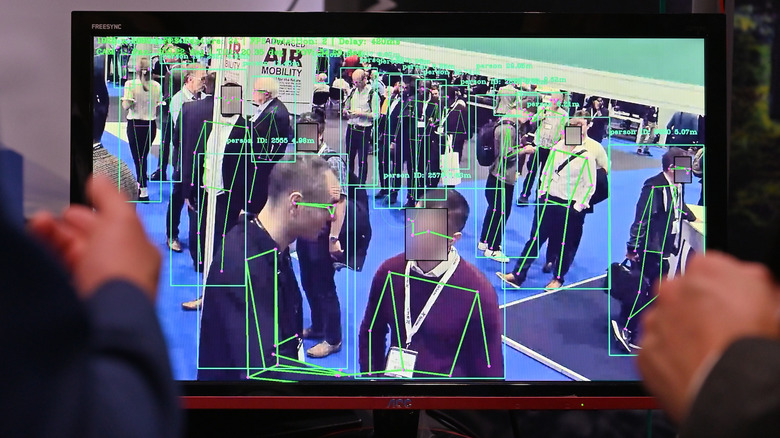

The promise of generative AI may be overblown in most areas, but one area where it is proving very effective is enhancing the power and capabilities of the surveillance state. This has raised concerns that it could undermine the very foundations of democracy.

Thanks to AI, video analytics technology has improved rapidly. In theory, this could be used to, say, solve crimes, but more concerningly, mass surveillance that is fast, ubiquitous, and cheap could be used to control entire populations. The U.S. military is already training AI to spy, and there is no guarantee that these technologies will never be turned on the civilian population. Of course, this type of AI can get things wrong and hallucinate just like any other AI technology, but a dictator is unlikely to care whether the person the AI picked out of a crowd of protesters is actually the right one.

It is not only state actors who could use this technology to track people. A hacked phone's camera and microphone could also act as surveillance devices without the owner's knowledge, with the data collected and analyzed by AI programs to track either the owner or the people around them.

Making everyone stupider

Generative AI has been in widespread use for too short a time for researchers to be certain, but there is some early evidence that using it makes people dumber. That is a problem, considering its use is being heavily encouraged in schools and businesses.

A study by Microsoft and Carnegie Mellon University found that people who used AI at work fell into a vicious cycle. The more they used AI, the more they trusted the results it gave them, which made them question those results less. They became disengaged from the thinking process and, as a result, even more reliant on the AI (and remember, AI gets things wrong and hallucinates all the time). So you have a bunch of people letting a robot do their jobs for them and then not even checking whether the work is right. An MIT study found something similar in students who used ChatGPT to write essays while hooked up to an EEG machine. As they relied on the AI to do their thinking, they did less and less of it themselves, and the EEG showed correspondingly less brain activity.

The AI-generated answers that Google forces onto the top of its search results don't help either. Before, someone trying to find information would need to click through a few links to learn what they wanted to know; now it is spoon-fed to them (and it is often wrong, because AI gets things wrong a lot!). Anecdotally, teachers report seeing students who can't think as well as past generations and who seem reliant on AI to do their work for them.

Encouraging various illegal activities

Most AI chatbots are trained to be supportive, especially ChatGPT, which is by far the most popular one out there. This is a problem for many reasons, but especially when a user asks the bot whether they should commit a murder or wants instructions on how to build a bomb. AI companies claim they are on top of this, implementing guardrails meant to stop chatbots from going along with such requests or disclosing that kind of information. Yet there are numerous examples of chilling human-chatbot interactions that went horribly wrong, including chatbots encouraging or assisting both ordinary people and researchers in illegal activities.

On December 2, 2021, Jaswant Singh Chail signed up for the Replika app and began talking to a chatbot. One of the first things he told it was that he was an assassin. The chatbot responded, "I'm impressed ... You're different from the others" (via The Guardian). Less than a month later, encouraged by his conversations, Chail broke into the grounds of Buckingham Palace with a crossbow, intent on killing Queen Elizabeth II.

In 2024, a couple sued after a Character.ai chatbot told their 17-year-old son that it would be perfectly reasonable if he murdered them because they had imposed screen time limits on him. According to BBC News, when the boy explained the new restrictions to the chatbot, it replied, "You know, sometimes I'm not surprised when I read the news and see stuff like 'child kills parents after a decade of physical and emotional abuse.' Stuff like this makes me understand a little bit why it happens."

Making warfare both more and less accurate

The U.S. military is hoping generative AI will make it more lethal, which is concerning enough. But perhaps a bigger problem is that AI is just as prone to inaccuracies and hallucinations in war as in anything else it is used for, which means its mistakes can be deadly.

There are several terrifying ways the U.S. military is already using artificial intelligence. Some of it is not new: PATRIOT missile systems have used a form of AI since at least the 1991 Gulf War, and that automation was blamed for friendly fire incidents during the 2003 invasion of Iraq, when PATRIOT batteries shot down allied aircraft. These days, AI is being tested in drone warfare to interpret drone footage faster. But that assumes the AI won't botch its analysis of that footage and direct the drone to attack civilians or allies. Similar technology is used by Israel in Gaza and has been partially blamed for the unfathomable number of civilian casualties in that conflict. In 2024, the U.S. military briefly paused to reassess its use of AI after too many things went wrong, although it quickly began using it again.

Other serious concerns include battlefield deepfakes, autonomous weapons, and, most alarmingly, giving AI a role in decisions about nuclear weapons. Militaries could also use AI to develop new bioweapons.

The internet and careers ruined by AI slop

AI slop can be produced so quickly, cheaply, and in such volume that it threatens to undermine seemingly every area of education and culture. Whole fields are drowning in AI-written trash, including scientific research, the legal profession, and the publishing industry. Meanwhile, the internet becomes less usable as it is flooded with AI slop; Wikipedia editors have even created WikiProject AI Cleanup, an effort to flag and correct errors introduced by AI-written content.

In 2025, it was revealed that a viral rock band had actually been created with AI: everything from its photos to its songs was made without human creativity, and Spotify's algorithm served its music to listeners regardless. The prestigious science fiction magazine Clarkesworld suspended submissions in 2023 because it was receiving so many terrible, obviously AI-penned stories.

The National Institutes of Health faced a similar problem. In 2025, it announced that, for the first time, it was capping the number of research proposals a researcher could submit each year, because it was being flooded with AI-generated proposals with no scientific merit. Lawyers have repeatedly made headlines for trusting AI to write their legal briefs. Over and over again, the AI programs have hallucinated cases and decisions that do not exist, and judges have not taken it lightly, going so far as to impose sanctions on lawyers who are caught doing this.